I have a client who was running out of disk space due to a database log which was growing exponentially. The database was set to Simple recovery, and the log was set to Truncate on Checkpoint. The log was growing hugely and had to be manually shrunk every few days.

To find out the reason for the log not truncating I ran this query.

SELECT name,log_reuse_wait , log_reuse_wait_desc FROM sys.databases

I found out that the database was not truncating due to an Active Transaction. This can be caused by a long running transaction or by a deferred transaction. http://technet.microsoft.com/en-us/library/ms345414(v=sql.105)

In the meantime the database went into Recovery mode and a number of things happened of which I am not aware. Somehow the active transaction got cleared and the client manually shrunk the log file.

We changed a few settings to minimize the damage should this happen again. The Autogrowth was set to 20% with no maximum. We change the Autogrow settings to something more reasonable, with the initial size of 5GB, and a maximum of 10GB to at least protect the other files from getting stalled due to no space on the drive.

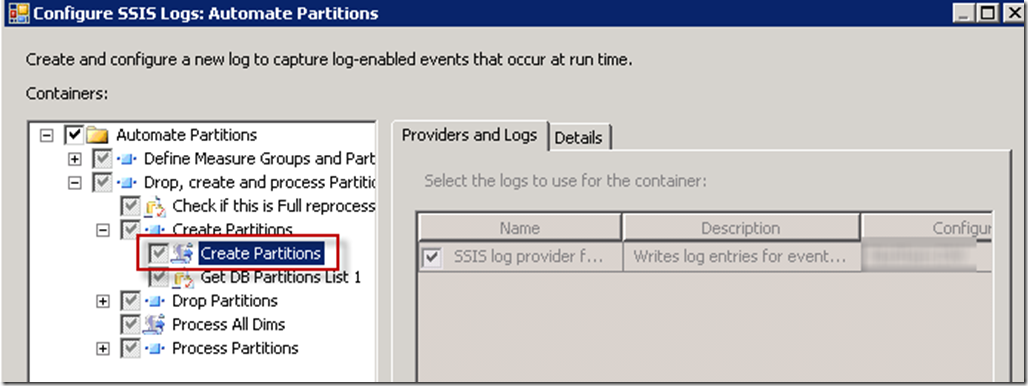

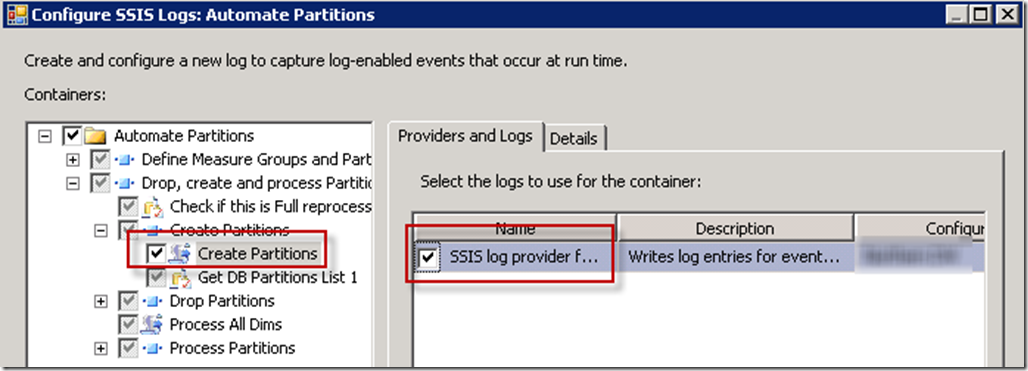

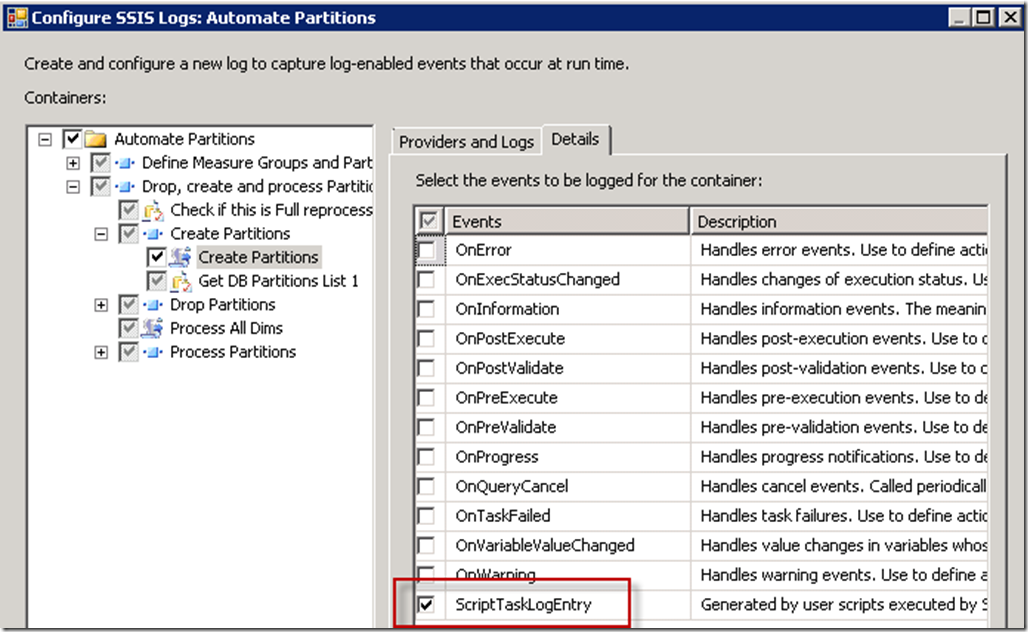

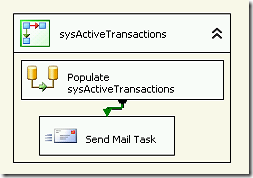

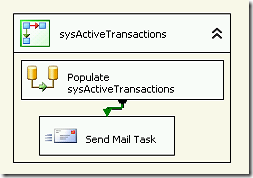

Then I looked at capturing the Active Transaction information. Unfortunately, unless the Active Transaction was running, I was unable to find out what the transaction was. I built a very simple SSIS package which I ran every 5 minutes to discover if an Active Transaction was holding up the log file, and to capture some information about what transaction was running and who was running it.

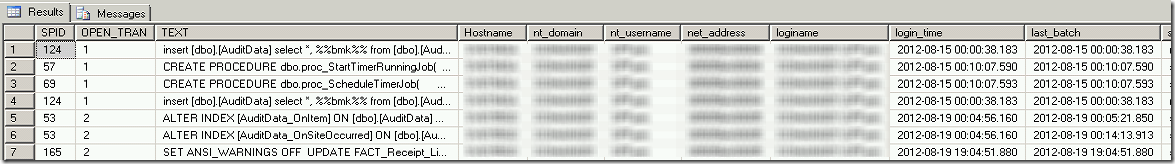

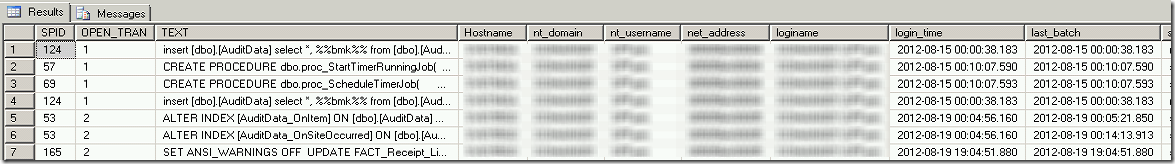

This package populated a new table in the data warehouse with the attributes of a transaction. To avoid filling the table with information I didn’t need, I wrote the query so it would only populate the table if there were any transactions holding the log open. Here is the source query for the Data Flow. Should the IF EXISTS be negative, the Data Flow task would fail causing the Send Mail Task not to be triggered.

IF EXISTS

–IF log is held up due to Active Transaction

(SELECT name, database_ID, log_reuse_wait , log_reuse_wait_desc

FROM sys.databases

WHERE log_reuse_wait_desc = ‘ACTIVE_TRANSACTION’ AND database_id = <your database id>)

–Find out the user and which query is holding it open

SELECT s.SPID,S.OPEN_TRAN,TEXT,s.Hostname,s.nt_domain,nt_username,net_address,s.loginame,

s.login_time, s.last_batch, s.status, s.sid, s.hostname as [Host_Name],

s.program_name, s.cmd, s.net_library, GETDATE() as InputDate

FROM SYS.SYSPROCESSES S CROSS APPLY SYS.DM_EXEC_SQL_TEXT(S.SQL_HANDLE)AS A

WHERE OPEN_TRAN<>0

The really nice thing about this query is that it returns the Text fo the actual query which is holding the log open, as well as program_name which is running it and the Host_Name.

I set up a SQL Server Agent job to run the package every 5 minutes, since I only wanted to capture items which were long running.

Using this package I was able to very quickly find out that there was an automated process on SharePoint which was running every hour but for whatever reason was never able to complete (that’s another story). Because the transaction didn’t successfully complete it remained flagged as Active, the Checkpoint for the log was not removed and the log just kept growing and was never truncated. Once the issue with the SharePoint job was resolved the log file was able to resume normal behaviour and Truncate on Checkpoint as expected.