I have a client whose data is large enough that their cube needs to be partitioned monthly. This process needed to be automated. I would like to thank Vidas Matelis for posting the scripts he used to do this, here. I started with his scripts and changed a number of things to get it to work in my environment. This is done in SQL 2008. Here is how it works.

1. Create schema and tables to hold the partition definitions

USE [yourdatabase]

GO

CREATE SCHEMA [config] AUTHORIZATION [dbo]

GO

CREATE TABLE [config].[SSASPartitions](

[CubeName] [varchar](100) NOT NULL,

[MeasureGroup] [varchar](100) NOT NULL,

[Partition] [varchar](100) NOT NULL,

[SQL] [varchar](3000) NOT NULL,

[MinDateKey] [int] NOT NULL,

[MaxDateKey] [int] NOT NULL,

[NeedsReProcessing] [bit] NOT NULL,

[LastProcessDate] [smalldatetime] NULL,

CONSTRAINT [PK_SSASPartitions] PRIMARY KEY CLUSTERED

(

[CubeName] ASC,

[MeasureGroup] ASC,

[Partition] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

GO

CREATE TABLE [config].[SSASPartitionsBase](

[CubeName] [varchar](100) NOT NULL,

[MeasureGroup] [varchar](100) NOT NULL,

[FactTableName] [varchar](3000) NOT NULL,

[PartitionFieldName] [varchar](100) NULL,

[TableStartDateKey] [int] NULL,

[LoadType1] [varchar](20) NULL,

[LoadType2] [varchar](20) NULL,

[LoadType3] [varchar](20) NULL,

CONSTRAINT [PK_SSASPartitionsBase] PRIMARY KEY CLUSTERED

(

[CubeName] ASC,

[MeasureGroup] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

GO

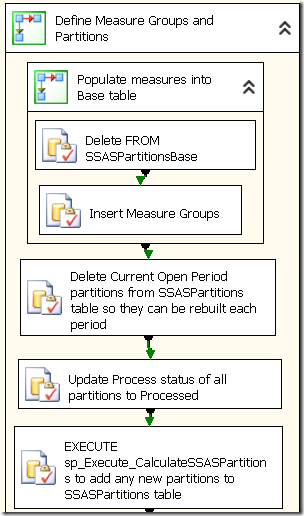

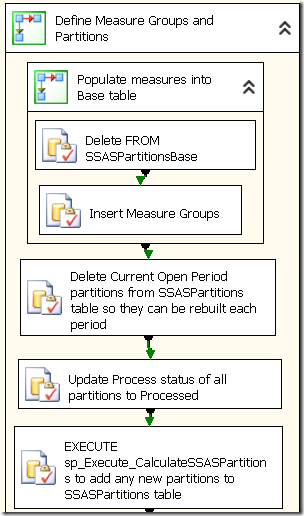

2. Add Execute SQL tasks to populate the tables with Measure Group and Partition definitions

This is the first container in the package, which simply populates the tables created in step 1.

Add these Execute SQL Tasks to your SSIS package.

2a) Delete FROM SSASPartitionsBase

Delete FROM config.SSASPartitionsBase

2b) Insert Measure Groups

Below you will find some examples of rows to be inserted into the SSASPartitionsBase table. This table is used as the base for building out the partition definitions. You will need one record for each measure group in your cube, regardless of one or many partitions. If you will require multiple partitions for a measure group, you must populate the PartitionFieldName and the TableStartDateKey so the script will know what SQL to write to define the partitions.

If you are pulling data into your cube using a query rather then directly from a table you must enter it here.

You may want to use LoadType if you will be defining different partitions for different data sets. I am not using this because any of my measure groups that need multiple partitions will use the same periods for the partitions. This will become clearer when we look at the Stored Procedure which populates the SSASPartitions table.

INSERT INTO config.SSASPartitionsBase

(

CubeName,

MeasureGroup,

FactTableName,

PartitionFieldName,

TableStartDateKey,

LoadType1)

-----------------------------------------

--Single partition does not require PartitionFieldName or TableStartDateKey

-----------------------------------------

--FACT table with single partition

-----------------------------------------

SELECT 'Sales and Inventory' as CubeName,

'Planning' as MeasureGroup,

'FACT_Planning' as FactTableName,

NULL as PartitionFieldName,

NULL as TableStartDateKey,

'Planning' as LoadType1

UNION

--FACT table with multiple partitions

-----------------------------------------

SELECT 'Sales and Inventory' as CubeName,

'Inv OH' as MeasureGroup,

'FACT_Inv_OH' as FactTableName,

'Date' as PartitionFieldName, --Required

'20070101' as TableStartDateKey, --Required

'Inventory' as LoadType1

UNION

--View on Fact Tables with multiple partitions

-----------------------------------------

SELECT 'Sales and Inventory' as CubeName,

'Sales' as MeasureGroup,

'vwFACT_Sales' as FactTableName, --Required

'Transaction_Date' as PartitionFieldName, --Required

'20070101' as TableStartDateKey,

'Sales' as LoadType1

UNION

--Query on a FACT table with single partition

--no PartitionFieldName or TableStartDateKey

-----------------------------------------

SELECT 'Sales and Inventory' as CubeName,

'Purchase Orders' as MeasureGroup,

'(SELECT Expected_Receipt_Date, Product_ID,

SUM(Due_In_Quantity) AS Open_PO_DueTW_Quantity

FROM FACT_PO AS PO WHERE (Due_In_Quantity > 0)

AND (Expected_Receipt_Date <= GETDATE())

GROUP BY Expected_Receipt_Date, Product_ID) X'

as FactTableName,

NULL as PartitionFieldName,

NULL as TableStartDateKey,

'PO' as LoadType1

2c) Delete Current Open Period partitions

Because I have other SSIS packages that process the Open Period during the week, I need to have one partition in each multi-partition measure group that has a consistent name. I have a naming convention like “Sales Current Open Period” or “Inv OH Current Open Period”. The definition of the Current Open Period changes periodically, as a new monthly partition is created. For example I might have a Current Open Period of Nov 1 to current day. Let’s on November 30 a partition is saved off and the Current Open Period becomes Dec 1 to current day. The Current Open Period partition needs to be dropped and recreated each time the script is run, to be sure that any new definition will be captured and processed. Dropping it from the SSASPartitions table ensure that it will be reinserted with the updated definition.

Delete

FROM [config].[SSASPartitions]

WHERE Partition like '%Open Period'

2d) Update Process status of all partitions to be processed

Any single partition measure groups need to be reprocessed every time the script is run. We can identify these measure groups as those where MinDateKey=0. This task flags these single partition measure groups to be processed.

UPDATE config.SSASPartitions

SET NeedsReProcessing = 1

WHERE MinDateKey=0

2e) Execute sp_Execute_CalculateSSASPartitions to add any new partitions to the SSASPartitions table

This stored procedure kicks off a stored procedure which adds any new partitions to the SSASPartitions table.

EXECUTE sp_CalculateSSASPartitions

Stored Procedure

This is the stored procedure which uses the SSASPartitionsBase table to populate the SSASPartitions table.

USE [yourdatabase]

GO

/****** Object: StoredProcedure [dbo].[sp_CalculateSSASPartitions] Script Date: 05/12/2014 15:45:17 ******/

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE PROCEDURE [dbo].[sp_CalculateSSASPartitions]

WITH RECOMPILE

AS

BEGIN

SET NOCOUNT ON

PRINT 'Executing SP: CalculateSSASPartitions'

-- ---------------------------------------------------------------------------------------

-- ---------------------------------------------------------------------------------------

-- ---------------------------------------------------------------------------------------

--Create temp table

IF OBJECT_ID('tempdb..#tmpPart') IS NOT NULL DROP TABLE #tmpPart

CREATE TABLE #tmpPart (

CubeName varchar(100) NOT NULL

, MeasureGroup varchar(100) NOT NULL

, [Partition] varchar(200) NOT NULL

, [SQL] varchar(3000) NOT NULL

, MinDateKey int NOT NULL

, MaxDateKey int NOT NULL

)

-----------Partitions using Archive tables-----------

INSERT INTO #tmpPart(CubeName, MeasureGroup, [Partition], [SQL], MinDateKey, MaxDateKey)

SELECT

P.CubeName,

P.MeasureGroup,

P.MeasureGroup + ' ' + D.PartitionName AS Partition,

'SELECT * FROM ' +

CASE WHEN D.PartitionName = 'Current Open Period' THEN P.FactTableName ELSE P.FactTableName + '_Archive' END

+ ' WHERE ' + P.PartitionFieldName

+ CASE WHEN MinDateKey = MaxDateKey THEN '=' + CONVERT(varchar, MinDateKey)

ELSE ' BETWEEN ''' + CONVERT(varchar, D.MinDateKey) + ''' AND ''' + CONVERT(varchar, D.MaxDateKey) + ''''

END

AS SQL,

CASE WHEN D.MinDateKey < P.TableStartDateKey THEN P.TableStartDateKey ELSE D.MinDateKey END AS MinDateKey,

D.MaxDateKey

FROM config.SSASPartitionsBase P

JOIN (

--Add FiscalYear/Month partitions up to Closed Period

SELECT CAST(FiscalMonthID as varchar) AS PartitionName, MIN(DateID) AS MinDateKey, MAX(DateID) AS MaxDateKey

FROM DIM_Date

WHERE FiscalYearID >= (SELECT DISTINCT FiscalYearID FROM DIM_Date WHERE TheDate = CAST(DATEADD(year, -3, GETDATE()) as Date))

AND TheDate <= (SELECT MAX(ClosedDate) FROM DIM_Closed_Period WHERE DataArchived = 1)

GROUP BY FiscalMonthID

UNION ALL

--Current Open Period

SELECT 'Current Open Period' AS PartitionName, MIN(DateID) AS MinDateKey, MAX(DateID) AS MaxDateKey

FROM DIM_Date

WHERE TheDate > (SELECT MAX(ClosedDate) FROM DIM_Closed_Period WHERE DataArchived = 1)

AND TheDate <= (SELECT MAX(ClosedDate) as MaxClosedDate FROM DIM_Closed_Period)

) D ON D.MaxDateKey >= P.TableStartDateKey

WHERE P.MeasureGroup IN ('Inv OH Orig', 'Inv OH','Sales','Sales Customer','Sales Transaction','Sales Cost')

ORDER BY D.PartitionName

-- -------------------------------------------------

-- Add measure groups that will not be partitioned

INSERT INTO #tmpPart(CubeName, MeasureGroup, [Partition], [SQL], MinDateKey, MaxDateKey)

SELECT P.CubeName, P.MeasureGroup, P.MeasureGroup AS Partition

, 'SELECT * FROM ' + P.FactTableName AS SQL

, 0 AS MinDateKey, 999999 AS MaxDateKey

FROM config.SSASPartitionsBase P

WHERE ISNULL(PartitionFieldName,'') = ''

-- Safety. If we miss any measure groups, add records as if they were not partitioned.

INSERT INTO #tmpPart(CubeName, MeasureGroup, [Partition], [SQL], MinDateKey, MaxDateKey)

SELECT CubeName, MeasureGroup, MeasureGroup AS [Partition], 'SELECT * FROM ' + FactTableName AS [SQL]

, 0 MinDateKey, 999999 MaxDateKey

FROM config.SSASPartitionsBase B

WHERE NOT EXISTS(SELECT * FROM #tmpPart T WHERE T.MeasureGroup = B.MeasureGroup)

-- ----------------------------------------------------------------------------------------------------------------------------------

DELETE FROM config.SSASPartitions

FROM config.SSASPartitions P

JOIN config.SSASPartitionsBase B ON B.CubeName = P.CubeName AND B.MeasureGroup = P.MeasureGroup

WHERE

-- (B.LoadType1 = @Action OR B.LoadType2 = @Action OR B.LoadType3 = @Action) AND

NOT EXISTS(SELECT * FROM #tmpPart T WHERE T.CubeName = P.CubeName AND T.MeasureGroup = P.MeasureGroup

AND T.Partition = P.Partition)

PRINT 'Deleted partitions that do not exists in new list. Record count: ' + CONVERT(varchar, @@ROWCOUNT)

INSERT INTO config.SSASPartitions(CubeName, MeasureGroup, [Partition], [SQL]

, MinDateKey, MaxDateKey, NeedsReProcessing)

SELECT CubeName, MeasureGroup, [Partition], [SQL]

, MinDateKey, MaxDateKey, 1 AS NeedsReProcessing

FROM #tmpPart T

WHERE NOT EXISTS(SELECT * FROM config.SSASPartitions P

WHERE P.CubeName = T.CubeName AND P.MeasureGroup = T.MeasureGroup

AND P.Partition = T.Partition

)

PRINT 'Inserted partitions that do not exists. Record count: ' + CONVERT(varchar, @@ROWCOUNT)

END

GO

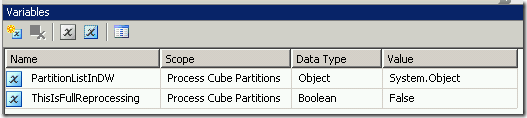

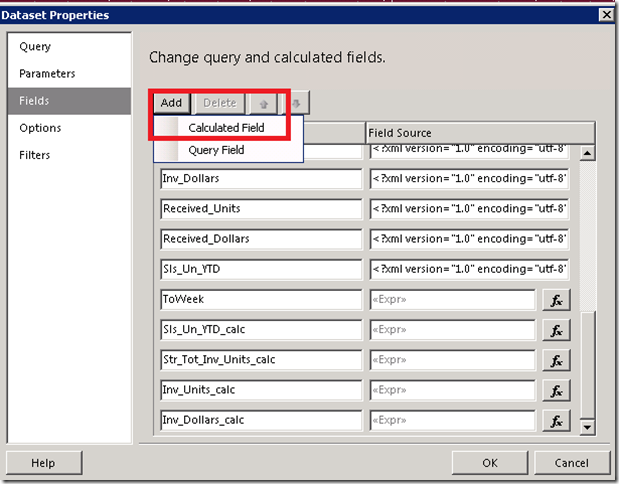

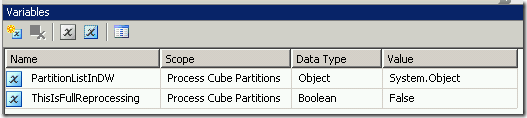

3. Create Two Variables in your package

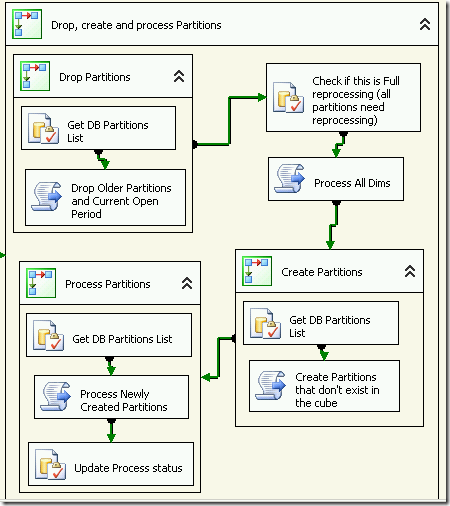

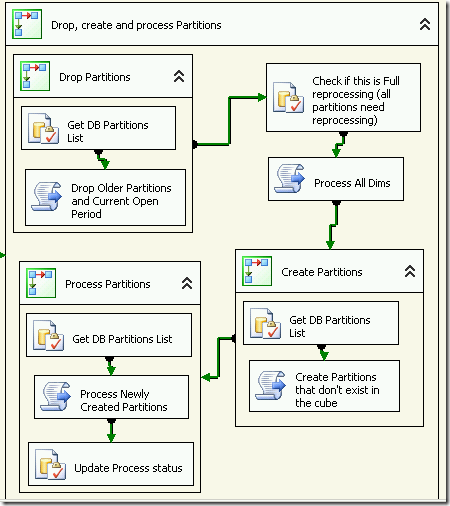

4.Add tasks to Drop, Create and Process Partitions

This is the second and final container that uses the information created in the first steps to actually drop, create and process any partitions which are new or which need to be reprocessed.

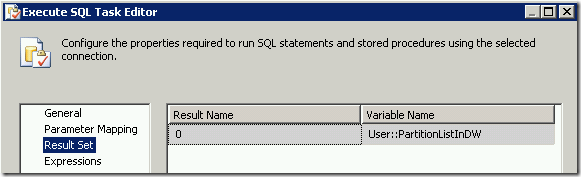

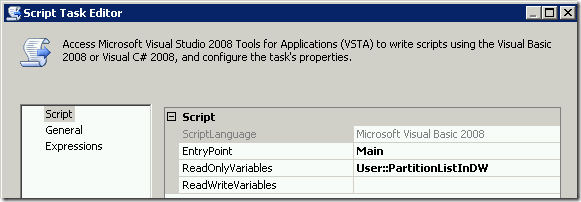

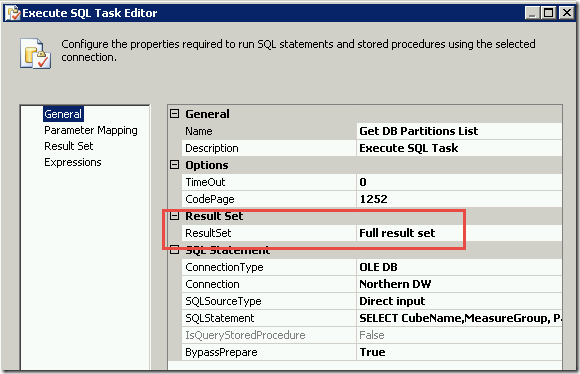

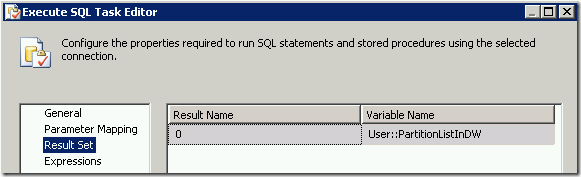

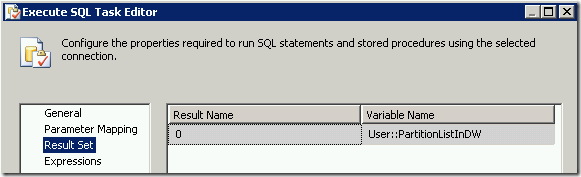

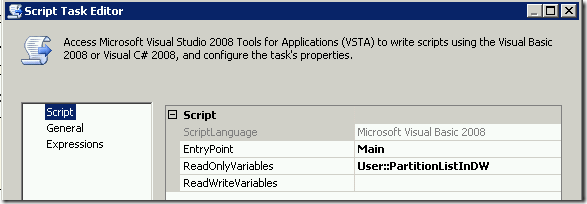

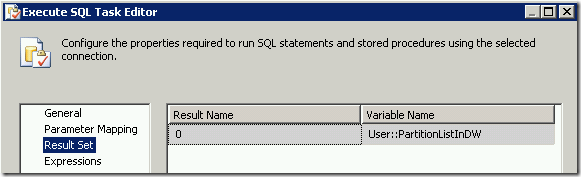

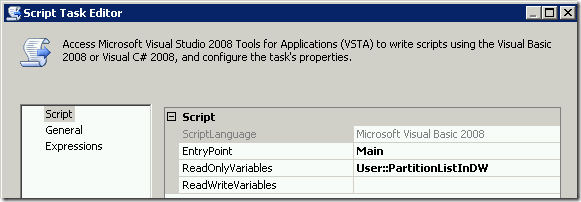

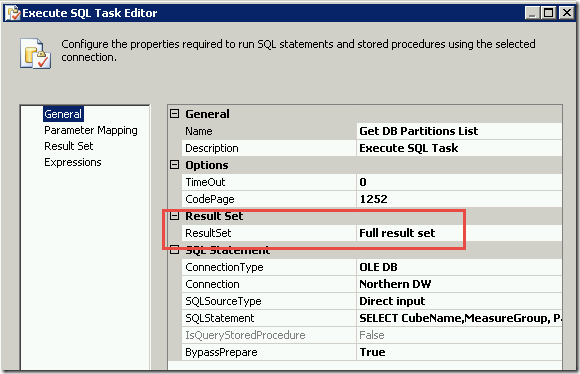

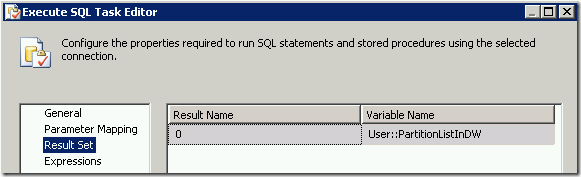

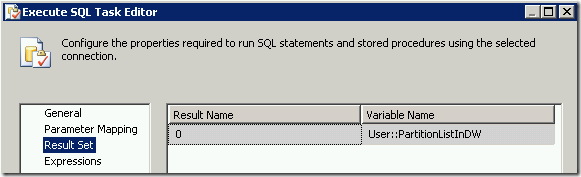

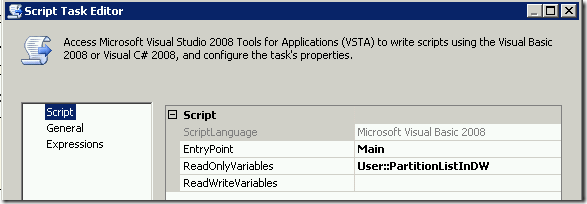

4a) Get DB Partitions List

SELECT CubeName,MeasureGroup, Partition, SQL, NeedsReProcessing

FROM config.SSASPartitions

ORDER BY 1,2,3

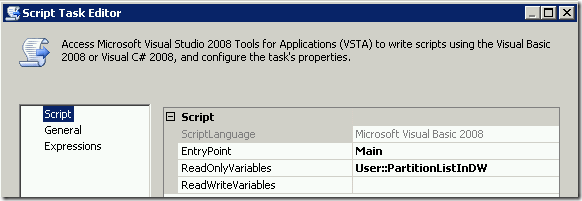

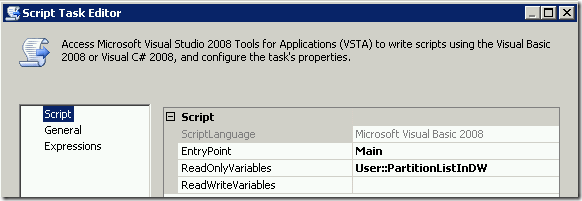

4b) Drop Older Partitions and Current Open Partitions

' Microsoft SQL Server Integration Services Script Task

' Write scripts using Microsoft Visual Basic 2008.

' The ScriptMain is the entry point class of the script.

Imports System

Imports System.Data

Imports System.Math

Imports Microsoft.SqlServer.Dts.Runtime

Imports System.Xml

Imports System.Data.OleDb

Imports Microsoft.AnalysisServices

<System.AddIn.AddIn("ScriptMain", Version:="1.0", Publisher:="", Description:="")> _

<System.CLSCompliantAttribute(False)> _

Partial Public Class ScriptMain

Inherits Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase

Enum ScriptResults

Success = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Success

Failure = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Failure

End Enum

' The execution engine calls this method when the task executes.

' To access the object model, use the Dts property. Connections, variables, events,

' and logging features are available as members of the Dts property as shown in the following examples.

'

' To reference a variable, call Dts.Variables("MyCaseSensitiveVariableName").Value

' To post a log entry, call Dts.Log("This is my log text", 999, Nothing)

' To fire an event, call Dts.Events.FireInformation(99, "test", "hit the help message", "", 0, True)

'

' To use the connections collection use something like the following:

' ConnectionManager cm = Dts.Connections.Add("OLEDB")

' cm.ConnectionString = "Data Source=localhost;Initial Catalog=AdventureWorks;Provider=SQLNCLI10;Integrated Security=SSPI;Auto Translate=False;"

'

' Before returning from this method, set the value of Dts.TaskResult to indicate success or failure.

'

' To open Help, press F1.

Public Sub Main()

'--------------------------------------------------------------------------------------

'THIS PACKAGE WILL DROP ANY MISSING PARTITIONS AND ANY "CURRENT OPEN PERIOD" PARTITIONS

'--------------------------------------------------------------------------------------

Dim ExecutionSuccessfull As Boolean = True ' If true, package executed without errors

Dim PartListInDM(999, 2) As String ' expecting no more than a 1000 partitions.

Dim DropPartCount As Integer = 0

' These objects capture execution results

Dim oResults As Microsoft.AnalysisServices.XmlaResultCollection

Dim oResult As Microsoft.AnalysisServices.XmlaResult

Dim oMessage As Microsoft.AnalysisServices.XmlaMessage

Dim dataBytes(0) As Byte

' These objects capture your partitions table

Dim oOleDB As New OleDbDataAdapter

Dim oTable As New DataTable

Dim oRow As DataRow

oOleDB.Fill(oTable, Dts.Variables("PartitionListInDW").Value)

'Connect to SSAS database

Dim oConnection As ConnectionManager

oConnection = Dts.Connections("Cube")

Dim sServer As String = CStr(oConnection.Properties("ServerName").GetValue(oConnection))

Dim sDatabase As String = CStr(oConnection.Properties("InitialCatalog").GetValue(oConnection))

Dim oServer As New Microsoft.AnalysisServices.Server

oServer.Connect(sServer) ' connect to the server and start scanning down the object hierarchy

Dim oDB As Microsoft.AnalysisServices.Database = oServer.Databases.FindByName(sDatabase)

If oDB Is Nothing Then

Dts.Log("Did not find expected database: " & sDatabase, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = False

GoTo Done

End If

'Cube attributes

Dim oCube As Microsoft.AnalysisServices.Cube

Dim oMeasureGroup As Microsoft.AnalysisServices.MeasureGroup

Dim oPartition As Microsoft.AnalysisServices.Partition

Dim bNeedToDropPartition As Boolean

Dim strCube As String 'Cube - Cube Name

Dim strMeasureGroup As String 'Cube - Measure Group

Dim strPartition As String 'Cube - Partition

Dim strRowCube As String 'Table - Cube Name

Dim strRowMeasureGroup As String 'Table - Measure Group

Dim strRowPartition As String 'Table - Partition

Dim bMatch As Boolean 'String match

'Iterate through each partition in the cube

For Each oCube In oDB.Cubes

strCube = oCube.Name

For Each oMeasureGroup In oCube.MeasureGroups

strMeasureGroup = oMeasureGroup.Name

For Each oPartition In oMeasureGroup.Partitions

strPartition = oPartition.Name

bNeedToDropPartition = True

'Sets bMatch to True or False. If Partition name like '%Current Open Period' then bMatch = True

bMatch = strPartition Like "*Current Open Period*"

'Iterate through each partition in the table and check if it exists in the cube already.

For Each oRow In oTable.Rows

strRowCube = oRow("CubeName").ToString

strRowMeasureGroup = oRow("MeasureGroup").ToString

strRowPartition = oRow("Partition").ToString

'If the partition exists then don't drop it, except "Current Open Period" partitions

If strRowCube = strCube And strRowMeasureGroup = strMeasureGroup _

And strRowPartition = strPartition And bMatch = "False" Then

bNeedToDropPartition = False

Dts.Log("PARTITION FOUND - don't drop" & strRowPartition & " Partition : " & strPartition, 0, dataBytes)

Exit For

End If

Next

'If the Partition does need to be dropped, then add the Partition details to the PartListInDM string object

If bNeedToDropPartition Then

PartListInDM(DropPartCount, 0) = oCube.Name

PartListInDM(DropPartCount, 1) = oMeasureGroup.Name

PartListInDM(DropPartCount, 2) = oPartition.Name

DropPartCount = DropPartCount + 1

Dts.Log("Found partition that needs to be dropped: " & oPartition.Name, 0, dataBytes) ' You need to setup package logging to see this

End If

Next

Next

Next

'Write the XML based on the PartListInDM string object to drop the Partitions

'-------------------

'START CAPTURING XML

'-------------------

oServer.CaptureXml() = True

Dim i As Integer = 0

For i = 0 To DropPartCount - 1

oCube = oDB.Cubes.FindByName(PartListInDM(i, 0))

oMeasureGroup = oCube.MeasureGroups.FindByName(PartListInDM(i, 1))

oPartition = oMeasureGroup.Partitions.FindByName(PartListInDM(i, 2))

oPartition.Drop()

Next i

oServer.CaptureXml() = False

'-------------------

'STOP CAPTURING XML

'-------------------

'Execute the log that contains the XMLA commands and drop the Partitions

oResults = oServer.ExecuteCaptureLog(True, False) 'Execute within transaction=True, Execute in parallel=False

'Log errors and warnings

For Each oResult In oResults

For Each oMessage In oResult.Messages

If oMessage.GetType.Name = "XmlaError" Then

'Errors

Dts.Log(oMessage.Description, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = False 'Fail the Scrip Task

Else

'Warnings

Dts.Log(oMessage.Description, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = True 'Don't fail the Scrip Task

End If

Next oMessage

Next oResult

' -------------------------------------------------------------------------------------------------------------------------

Done:

oServer.Disconnect()

If ExecutionSuccessfull Then

Dts.TaskResult = ScriptResults.Success

Else

Dts.TaskResult = ScriptResults.Failure

End If

End Sub

End Class

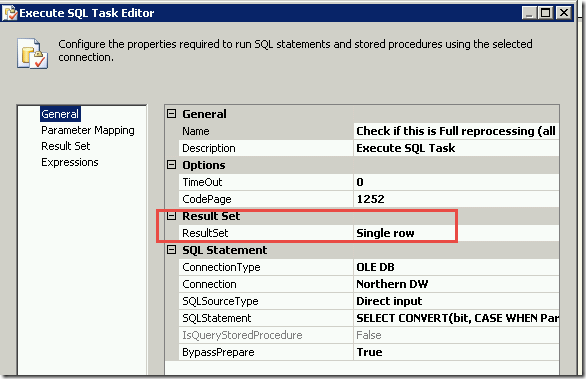

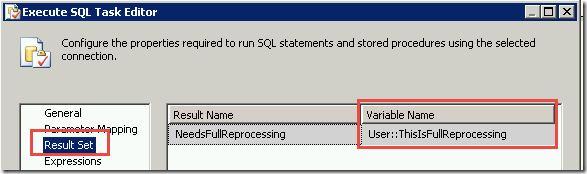

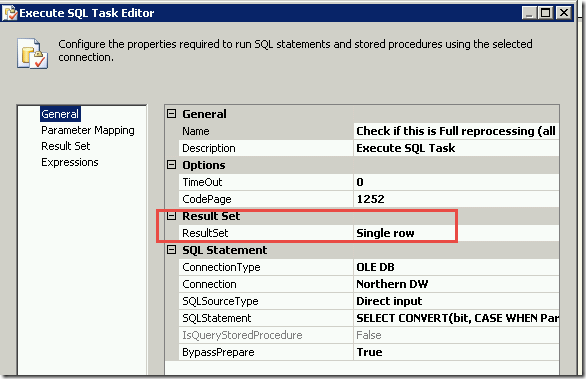

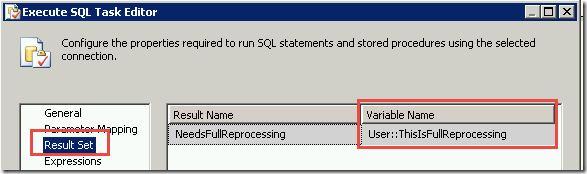

4c) Check if this is Full Reprocessing

This will check to see if the full cube will be reprocessed, or just some of the partitions.

SELECT CONVERT(bit, CASE WHEN PartCount = 0 THEN 1 ELSE 0 END) AS NeedsFullReprocessing

FROM (SELECT COUNT(*) AS PartCount FROM config.SSASPartitions WHERE NeedsReProcessing <> 1) B

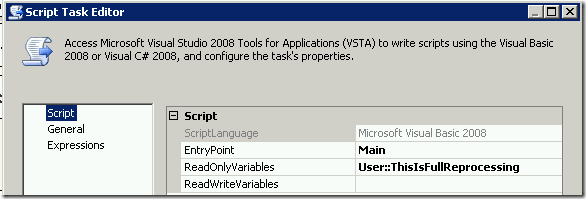

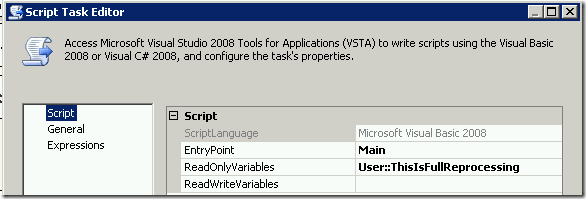

4d) Process All Dims

This will always process all dimensions.

' Microsoft SQL Server Integration Services Script Task

' Write scripts using Microsoft Visual Basic 2008.

' The ScriptMain is the entry point class of the script.

Imports System

Imports System.Data

Imports System.Math

Imports Microsoft.SqlServer.Dts.Runtime

Imports Microsoft.AnalysisServices

<System.AddIn.AddIn("ScriptMain", Version:="1.0", Publisher:="", Description:="")> _

<System.CLSCompliantAttribute(False)> _

Partial Public Class ScriptMain

Inherits Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase

Enum ScriptResults

Success = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Success

Failure = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Failure

End Enum

' The execution engine calls this method when the task executes.

' To access the object model, use the Dts property. Connections, variables, events,

' and logging features are available as members of the Dts property as shown in the following examples.

'

' To reference a variable, call Dts.Variables("MyCaseSensitiveVariableName").Value

' To post a log entry, call Dts.Log("This is my log text", 999, Nothing)

' To fire an event, call Dts.Events.FireInformation(99, "test", "hit the help message", "", 0, True)

'

' To use the connections collection use something like the following:

' ConnectionManager cm = Dts.Connections.Add("OLEDB")

' cm.ConnectionString = "Data Source=localhost;Initial Catalog=AdventureWorks;Provider=SQLNCLI10;Integrated Security=SSPI;Auto Translate=False;"

'

' Before returning from this method, set the value of Dts.TaskResult to indicate success or failure.

'

' To open Help, press F1.

Public Sub Main()

'

Dim ExecutionSuccessfull As Boolean = True ' If true, package executed without errors

Dim dataBytes(0) As Byte

' Dim sProcessType As String = "ProcessFull"

Dim oConnection As ConnectionManager

oConnection = Dts.Connections("Cube")

Dim sServer As String = CStr(oConnection.Properties("ServerName").GetValue(oConnection))

Dim sDatabaseID As String = CStr(oConnection.Properties("InitialCatalog").GetValue(oConnection))

Dim oServer As New Microsoft.AnalysisServices.Server

oServer.Connect(sServer) ' connect to the server and start scanning down the object hierarchy

Dim oDB As Microsoft.AnalysisServices.Database = oServer.Databases.FindByName(sDatabaseID)

Dim oThisIsFullReprocessing As Variable = Dts.Variables("ThisIsFullReprocessing") ' From variable

Dim ThisIsFullReprocessing As Boolean = CType(oThisIsFullReprocessing.Value, Boolean)

Dim ProcessType As Microsoft.AnalysisServices.ProcessType

If ThisIsFullReprocessing Then

ProcessType = Microsoft.AnalysisServices.ProcessType.ProcessFull

Dts.Log("Will be doing FULL dimension processing", 0, dataBytes) ' You need to setup proper package loging to see this!

Else

ProcessType = Microsoft.AnalysisServices.ProcessType.ProcessUpdate

Dts.Log("Will be doing UPDATE dimension processing", 0, dataBytes) ' You need to setup proper package loging to see this!

End If

If oDB Is Nothing Then

ExecutionSuccessfull = False

GoTo Done

Else

Dim oDim As Microsoft.AnalysisServices.Dimension

oServer.CaptureXml() = True ' Start capturing XML.

For Each oDim In oDB.Dimensions

' This will generate XMLA, but because CaptureXML is True, will not execute it!

If (oDim.MiningModel Is Nothing) Then

oDim.Process(ProcessType)

End If

Next

oServer.CaptureXml() = False ' Stop capturing XML

' Execute captured XML. First parameter Transactional, second parameter Parallel, third optional parameter: processAffected

' These are very important parameters!

Dim oResults As Microsoft.AnalysisServices.XmlaResultCollection

Dim oResult As Microsoft.AnalysisServices.XmlaResult

oResults = oServer.ExecuteCaptureLog(True, True)

'oResults = oServer.ExecuteCaptureLog(False, False)

Dim oMessage As Microsoft.AnalysisServices.XmlaMessage

Dim bt(0) As Byte

'Log the errors and warnings

For Each oResult In oResults

For Each oMessage In oResult.Messages

If oMessage.GetType.Name = "XmlaError" Then

Dts.Log(oMessage.Description, 0, bt)

'The processing failed

ExecutionSuccessfull = False

Else

'It's just a warning.

Dts.Log(oMessage.Description, 0, bt)

ExecutionSuccessfull = True ' if you want to fail on warning, change this to False

End If

Next oMessage

Next oResult

End If

Done:

oServer.Disconnect() ' disconnect from the server -- we are done

If ExecutionSuccessfull Then

Dts.TaskResult = ScriptResults.Success

Else

Dts.TaskResult = ScriptResults.Failure

End If

'

End Sub

End Class

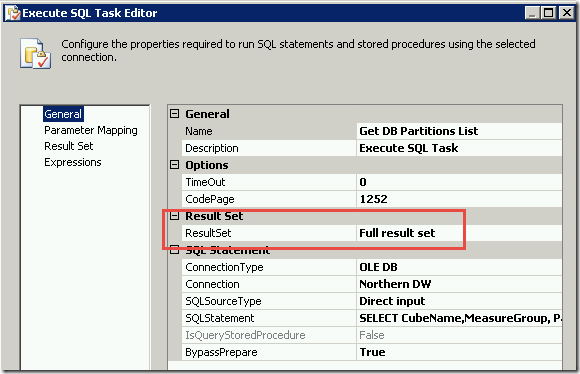

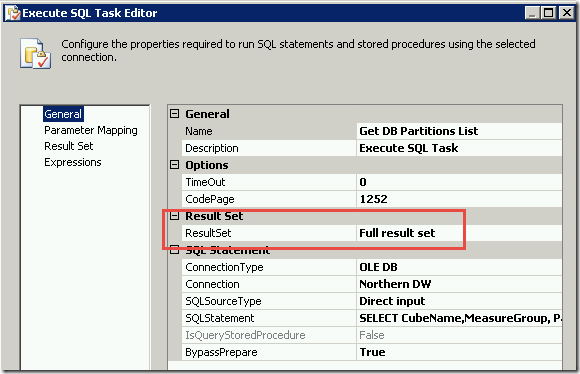

4e) Get DB Partitions List

SELECT CubeName,MeasureGroup, Partition, SQL, NeedsReProcessing

FROM config.SSASPartitions

ORDER BY 1,2,3

4f) Create Partitions that don’t exist in the cube

' Microsoft SQL Server Integration Services Script Task

' Write scripts using Microsoft Visual Basic 2008.

' The ScriptMain is the entry point class of the script.

Imports System

Imports System.Data

Imports System.Math

Imports System.Xml

Imports System.Data.OleDb

Imports Microsoft.SqlServer.Dts.Runtime

Imports Microsoft.AnalysisServices

<System.AddIn.AddIn("ScriptMain", Version:="1.0", Publisher:="", Description:="")> _

<System.CLSCompliantAttribute(False)> _

Partial Public Class ScriptMain

Inherits Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase

Enum ScriptResults

Success = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Success

Failure = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Failure

End Enum

' The execution engine calls this method when the task executes.

' To access the object model, use the Dts property. Connections, variables, events,

' and logging features are available as members of the Dts property as shown in the following examples.

'

' To reference a variable, call Dts.Variables("MyCaseSensitiveVariableName").Value

' To post a log entry, call Dts.Log("This is my log text", 999, Nothing)

' To fire an event, call Dts.Events.FireInformation(99, "test", "hit the help message", "", 0, True)

'

' To use the connections collection use something like the following:

' ConnectionManager cm = Dts.Connections.Add("OLEDB")

' cm.ConnectionString = "Data Source=localhost;Initial Catalog=AdventureWorks;Provider=SQLNCLI10;Integrated Security=SSPI;Auto Translate=False;"

'

' Before returning from this method, set the value of Dts.TaskResult to indicate success or failure.

'

' To open Help, press F1.

Public Sub Main()

'------------------------------------------------------------------------

'THIS SCRIPT TASK WILL CREATE ANY PARTITIONS IN THE LIST THAT DON'T EXIST

'------------------------------------------------------------------------

Dim ExecutionSuccessfull As Boolean = True ' If true, package executed without errors

' These objects capture execution results

Dim oResults As Microsoft.AnalysisServices.XmlaResultCollection

Dim oResult As Microsoft.AnalysisServices.XmlaResult

Dim oMessage As Microsoft.AnalysisServices.XmlaMessage

Dim dataBytes(0) As Byte

' These objects capture partitions table columns

Dim oOleDB As New OleDbDataAdapter

Dim oTable As New DataTable

Dim oRow As DataRow

oOleDB.Fill(oTable, Dts.Variables("PartitionListInDW").Value)

'Connect to SSAS Database

Dim oConnection As ConnectionManager

oConnection = Dts.Connections("Cube")

Dim sServer As String = CStr(oConnection.Properties("ServerName").GetValue(oConnection))

Dim sDatabase As String = CStr(oConnection.Properties("InitialCatalog").GetValue(oConnection))

Dim oServer As New Microsoft.AnalysisServices.Server

oServer.Connect(sServer) ' connect to the server and start scanning down the object hierarchy

Dim oDB As Microsoft.AnalysisServices.Database = oServer.Databases.FindByName(sDatabase)

If oDB Is Nothing Then

Dts.Log("Did not find expected database: " & sDatabase, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = False

GoTo Done

End If

'Cube attributes

Dim oCube As Microsoft.AnalysisServices.Cube

Dim oMeasureGroup As Microsoft.AnalysisServices.MeasureGroup

Dim oPartition As Microsoft.AnalysisServices.Partition

Dim strRowCube As String

Dim strRowMeasureGroup As String

Dim strRowPartition As String

'-------------------

'START CAPTURING XML

'-------------------

oServer.CaptureXml() = True

For Each oRow In oTable.Rows

strRowCube = oRow("CubeName").ToString

oCube = oDB.Cubes.FindByName(strRowCube)

If oCube Is Nothing Then

Dts.Log("Did not find cube: " & strRowCube, 0, dataBytes) 'You need to setup custom package logging to see this

Continue For

End If

strRowMeasureGroup = oRow("MeasureGroup").ToString

oMeasureGroup = oCube.MeasureGroups.FindByName(strRowMeasureGroup)

If oMeasureGroup Is Nothing Then

Dts.Log("Did not find measure group: " & strRowMeasureGroup, 0, dataBytes) 'You need to setup custom package logging to see this

Continue For

End If

strRowPartition = oRow("Partition").ToString

oPartition = oMeasureGroup.Partitions.FindByName(strRowPartition)

'If the Partition doesn't exist in the cube, then capture create it

If (oPartition Is Nothing) Then

Dts.Log("Need to create partition: " & strRowPartition, 0, dataBytes) 'You need to setup custom package logging to see this

oPartition = oMeasureGroup.Partitions.Add(strRowPartition)

oPartition.StorageMode = StorageMode.Molap

oPartition.Source = New QueryBinding(oDB.DataSources(0).ID, oRow("SQL").ToString)

If oMeasureGroup.AggregationDesigns.Count > 0 Then '

oPartition.AggregationDesignID = oMeasureGroup.AggregationDesigns(0).ID ' Take first available aggregation design

End If

'Capture XML to add the Partition

oPartition.Update(UpdateOptions.ExpandFull)

Dts.Log("Partition created: " & strRowPartition, 0, dataBytes) 'You need to setup custom package logging to see this

End If

Next

oServer.CaptureXml() = False

'-------------------

'STOP CAPTURING XML

'-------------------

'Execute the log that contains the XMLA commands and drop the Partitions

oResults = oServer.ExecuteCaptureLog(True, False) 'Execute within transaction=True, Execute in parallel=False

'Log the errors and warnings

For Each oResult In oResults

For Each oMessage In oResult.Messages

If oMessage.GetType.Name = "XmlaError" Then

'Errors

Dts.Log(oMessage.Description, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = False 'Fail the Script Task

Else

'Warnings

Dts.Log(oMessage.Description, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = True 'Don't fail the Script Task

End If

Next oMessage

Next oResult

' -------------------------------------------------------------------------------------------------------------------------

Done:

oServer.Disconnect()

If ExecutionSuccessfull Then

Dts.TaskResult = ScriptResults.Success

Else

Dts.TaskResult = ScriptResults.Failure

End If

End Sub

End Class

4g) Get DB Partitions List

SELECT CubeName,MeasureGroup, Partition, SQL, NeedsReProcessing

FROM config.SSASPartitions

ORDER BY 1,2,3

4h) Process Newly Created Partitions

' Microsoft SQL Server Integration Services Script Task

' Write scripts using Microsoft Visual Basic 2008.

' The ScriptMain is the entry point class of the script.

Imports System

Imports System.Data

Imports System.Math

Imports System.Xml

Imports System.Data.OleDb

Imports Microsoft.SqlServer.Dts.Runtime

Imports Microsoft.AnalysisServices

<System.AddIn.AddIn("ScriptMain", Version:="1.0", Publisher:="", Description:="")> _

<System.CLSCompliantAttribute(False)> _

Partial Public Class ScriptMain

Inherits Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase

Enum ScriptResults

Success = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Success

Failure = Microsoft.SqlServer.Dts.Runtime.DTSExecResult.Failure

End Enum

' The execution engine calls this method when the task executes.

' To access the object model, use the Dts property. Connections, variables, events,

' and logging features are available as members of the Dts property as shown in the following examples.

'

' To reference a variable, call Dts.Variables("MyCaseSensitiveVariableName").Value

' To post a log entry, call Dts.Log("This is my log text", 999, Nothing)

' To fire an event, call Dts.Events.FireInformation(99, "test", "hit the help message", "", 0, True)

'

' To use the connections collection use something like the following:

' ConnectionManager cm = Dts.Connections.Add("OLEDB")

' cm.ConnectionString = "Data Source=localhost;Initial Catalog=AdventureWorks;Provider=SQLNCLI10;Integrated Security=SSPI;Auto Translate=False;"

'

' Before returning from this method, set the value of Dts.TaskResult to indicate success or failure.

'

' To open Help, press F1.

Public Sub Main()

'

'------------------------------------------------------------------------

'THIS SCRIPT TASK WILL PROCESS ANY NEWLY CREATED PARTITIONS IN THE LIST

'------------------------------------------------------------------------

Dim ExecutionSuccessfull As Boolean = True ' If true, package executed without errors

' These objects capture execution results

Dim oResults As Microsoft.AnalysisServices.XmlaResultCollection

Dim oResult As Microsoft.AnalysisServices.XmlaResult

Dim dataBytes(0) As Byte

Dim oMessage As Microsoft.AnalysisServices.XmlaMessage

' These objects capture partitions table columns

Dim oOleDB As New OleDbDataAdapter

Dim oTable As New DataTable

Dim oRow As DataRow

oOleDB.Fill(oTable, Dts.Variables("PartitionListInDW").Value)

'Connect to SSAS Database

Dim oConnection As ConnectionManager

oConnection = Dts.Connections("Cube")

Dim sServer As String = CStr(oConnection.Properties("ServerName").GetValue(oConnection))

Dim sDatabase As String = CStr(oConnection.Properties("InitialCatalog").GetValue(oConnection))

Dim oServer As New Microsoft.AnalysisServices.Server

oServer.Connect(sServer) ' connect to the server and start scanning down the object hierarchy

Dim oDB As Microsoft.AnalysisServices.Database = oServer.Databases.FindByName(sDatabase)

If oDB Is Nothing Then

Dts.Log("Did not find expected database: " & sDatabase, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = False

GoTo Done

End If

'Cube attributes

Dim oCube As Microsoft.AnalysisServices.Cube

Dim oMeasureGroup As Microsoft.AnalysisServices.MeasureGroup

Dim oPartition As Microsoft.AnalysisServices.Partition

'-------------------

'START CAPTURING XML

'-------------------

oServer.CaptureXml() = True

'For each row in the table

For Each oRow In oTable.Rows

If LCase(oRow("NeedsReProcessing").ToString) = LCase("False") Then

Continue For

End If

'Find the cube

oCube = oDB.Cubes.FindByName(oRow("CubeName").ToString)

If oCube Is Nothing Then

Dts.Log("Did not find cube: " & oRow("CubeName").ToString, 0, dataBytes) 'You need to setup custom package logging to see this

Continue For

End If

'Find the MeasureGroup in the cube

oMeasureGroup = oCube.MeasureGroups.FindByName(oRow("MeasureGroup").ToString)

If oMeasureGroup Is Nothing Then

Dts.Log("Did not find measure group: " & oRow("MeasureGroup").ToString, 0, dataBytes) 'You need to setup custom package logging to see this

Continue For

End If

'Find the Partition in the Measure Group

oPartition = oMeasureGroup.Partitions.FindByName(oRow("Partition").ToString)

If (oPartition Is Nothing) Then

Dts.Log("Partition does not exists: " & oRow("Partition").ToString, 0, dataBytes) 'You need to setup custom package logging to see this

Continue For

End If

'Capture XML to Process the Partition

oPartition.Process(Microsoft.AnalysisServices.ProcessType.ProcessFull)

Next

' If the Measure Group is linked, then process the entire Measure Group

For Each oCube In oDB.Cubes

For Each oMeasureGroup In oCube.MeasureGroups

If oMeasureGroup.IsLinked Then

oMeasureGroup.Process(Microsoft.AnalysisServices.ProcessType.ProcessDefault)

End If

Next

Next

oServer.CaptureXml() = False

'-------------------

'STOP CAPTURING XML

'-------------------

'Execute the log that contains the XMLA commands and drop the Partitions

oResults = oServer.ExecuteCaptureLog(True, True) 'Execute within transaction=True, Execute in parallel=True

'Log the errors and warnings

For Each oResult In oResults

For Each oMessage In oResult.Messages

If oMessage.GetType.Name = "XmlaError" Then

'Errors

Dts.Log(oMessage.Description, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = False 'Fail the Script Task

Else

'Warnings

Dts.Log(oMessage.Description, 0, dataBytes) 'You need to setup custom package logging to see this

ExecutionSuccessfull = True 'Do not fail the Script Task

End If

Next oMessage

Next oResult

' -------------------------------------------------------------------------------------------------------------------------

Done:

oServer.Disconnect()

If ExecutionSuccessfull Then

Dts.TaskResult = ScriptResults.Success

Else

Dts.TaskResult = ScriptResults.Failure

End If

End Sub

End Class

4i) Update Process Status

Indicate that all the partitions have been processed.

UPDATE config.SSASPartitions

SET NeedsReProcessing = 0

,LastProcessDate = GETDATE()

WHERE NeedsReProcessing = 1

Thanks again to Vidas Matelis. His scripts, here, were invaluable.