My clients often want to refresh data on demand, without having to wait for a nightly data warehouse refresh. Quite often this occurs when they are updating data in SharePoint lists which get fed into the data warehouse and from there into cubes and reports. There is a very simple way to let a user trigger the data refresh of just their specific piece of data, using Nintex Workflow. If you don’t know about Nintex, it is a third-party product which adds a drag-and-drop workflow designer and advanced workflow features to SharePoint. It is inexpensive, and a great way to empower users to manage their business processes.

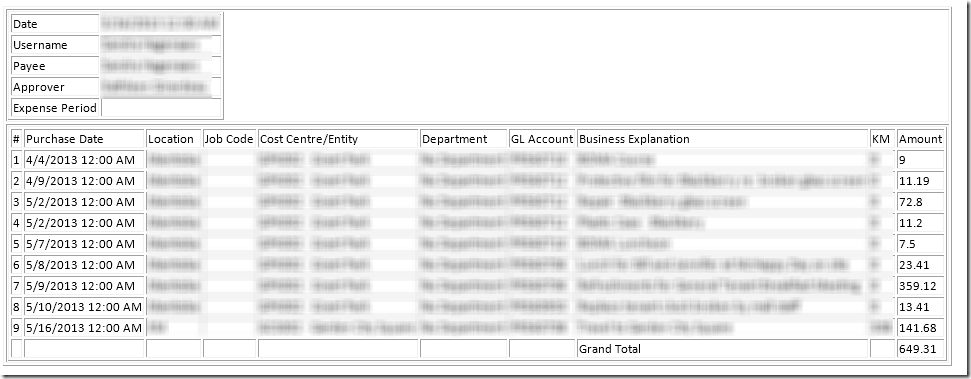

In this example a user has updated a series of SharePoint lists with current vendor scorecard data and would like to see it reflected in the cube and reports.

SSIS PACKAGES BEST PRACTICES

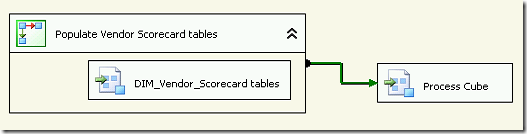

You will need to create an SSIS package which triggers the data refresh desired by the user. When building the SSIS packages which populate a data warehouse I like to keep them modular, with related actions in individual packages, and then use a master package to trigger the packages in the correct order. In this way I can trigger smaller jobs simply by creating additional master packages to run the relevant packages which already exist. I consider this best practice, since I only have to maintain the logic in one package, which can then be used by multiple master packages. I normally create one package for each of my dimension (attribute) tables and one for each of my fact (transactional) tables wherever possible, unless they are interdependent. That lets me trigger the packages in different sequences where necessary.

Using a master package to trigger a sequence of packages has many advantages over doing multiple steps in SQL Server Agent. I only trigger one master package using a SQL Server Agent Job. It allows me to keep the order of running a sequence of packages in SSIS, where I can add annotations to remind myself why certain packages need to be run before others. This helps me remember the interdependencies when I am making changes. It also lets me control checkpoints and transactions in one place, so I can restart a failed run from the point of failure or roll back multiple steps where necessary. And it helps me when reviewing the SSIS logs. I can see how long the master package takes to run from beginning to end, and compare that over time.

CREATE A NEW SSIS MASTER PACKAGE

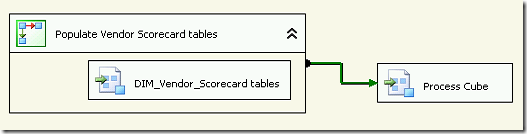

For this example I have a master package which populates the entire data warehouse and refreshes the data cube at the end. This is run nightly. I will add an additional master package, to be triggered by the user, which only refreshes the vendor scorecard data from the SharePoint lists into the data warehouse and then refreshes the data cube. We’ll call it MASTER Vendor Scorecard Refresh.

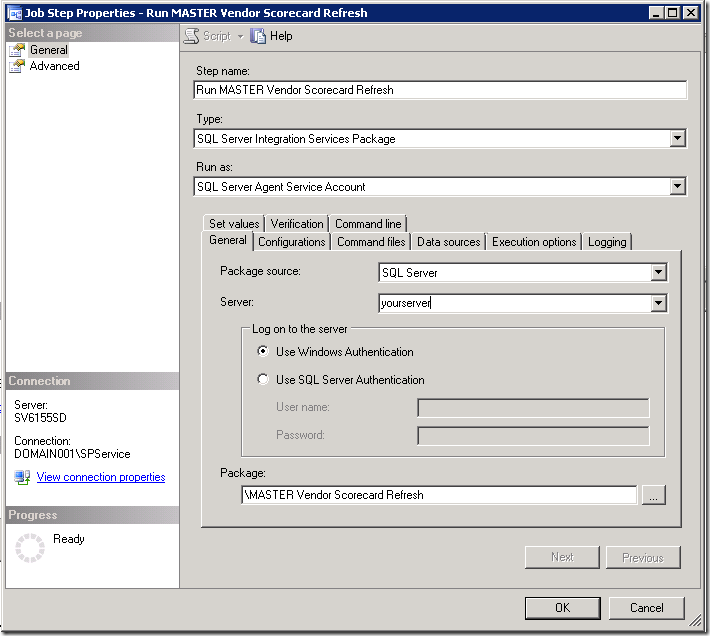

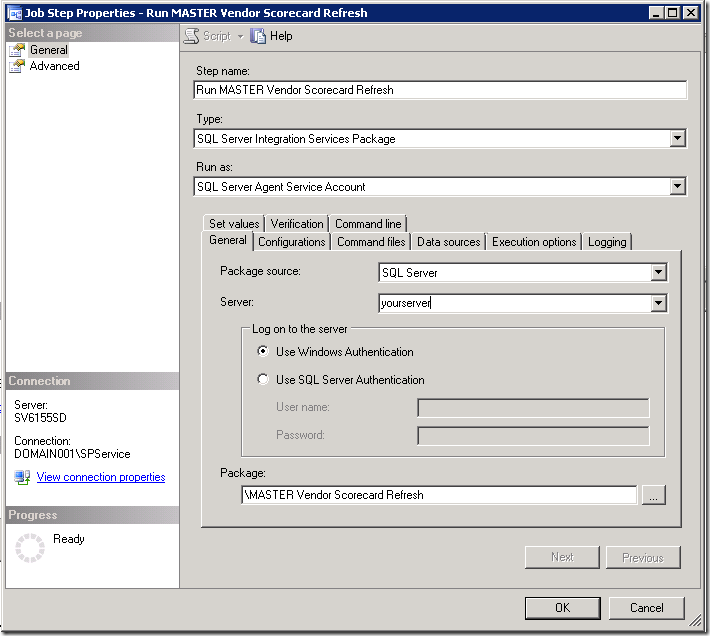

CREATE A SQL SERVER AGENT JOB

Now create a SQL Server Agent Job with one step which triggers the SSIS package you just created. Do not schedule this job, since it will be triggered manually by the user.
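If you prefer to script the job rather than click through SSMS, the following is a minimal sketch of the same setup using the msdb job procedures. It assumes the job is named Vendor Scorecard Refresh (the name used by the query later in this post) and that the package is deployed to the file system. The file path is hypothetical, and the exact dtexec arguments in the step command may need adjusting for your deployment.

```sql
USE msdb;
GO

-- Create the job with no schedule; it only runs when started on demand.
EXEC dbo.sp_add_job
    @job_name    = N'Vendor Scorecard Refresh',
    @enabled     = 1,
    @description = N'On-demand refresh of vendor scorecard data.';

-- One step that runs the master SSIS package.
-- The path below is hypothetical; point it at your deployed package.
EXEC dbo.sp_add_jobstep
    @job_name          = N'Vendor Scorecard Refresh',
    @step_name         = N'Run MASTER Vendor Scorecard Refresh',
    @subsystem         = N'SSIS',
    @command           = N'/FILE "C:\SSIS\MASTER Vendor Scorecard Refresh.dtsx"',
    @on_success_action = 1,  -- quit the job reporting success
    @on_fail_action    = 2;  -- quit the job reporting failure

-- Target the local server so SQL Server Agent can run the job.
EXEC dbo.sp_add_jobserver
    @job_name    = N'Vendor Scorecard Refresh',
    @server_name = N'(local)';
GO
```

No call to sp_add_jobschedule is made, which is what keeps the job unscheduled.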

While you are testing, add a notification to email you when the job completes. Once you know it’s working correctly, you could change the notification so it only emails you when the job fails.
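The notification can also be set in T-SQL. This sketch assumes Database Mail is configured and that an operator already exists; the operator name DW Admin is hypothetical.

```sql
-- During testing: email the operator every time the job completes.
EXEC msdb.dbo.sp_update_job
    @job_name                   = N'Vendor Scorecard Refresh',
    @notify_level_email         = 3,           -- 3 = always
    @notify_email_operator_name = N'DW Admin'; -- hypothetical operator

-- Once the job is known to work: email only on failure.
EXEC msdb.dbo.sp_update_job
    @job_name           = N'Vendor Scorecard Refresh',
    @notify_level_email = 2;                   -- 2 = on failure
```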

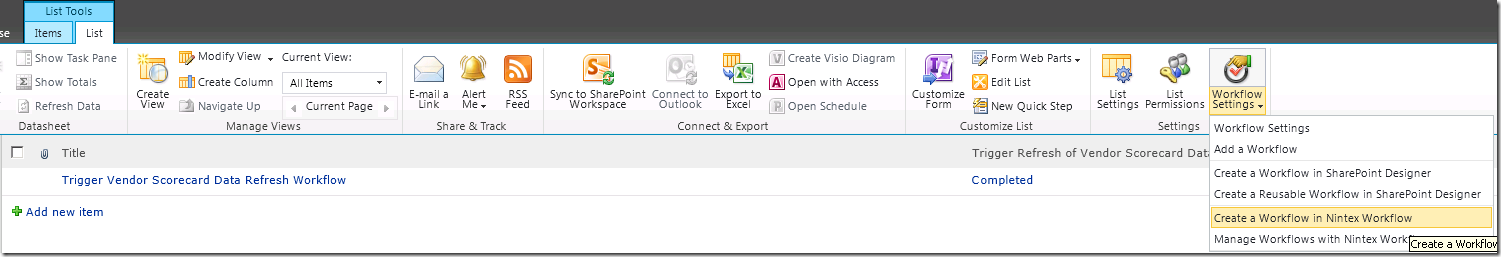

CREATE A SHAREPOINT LIST AND WORKFLOW

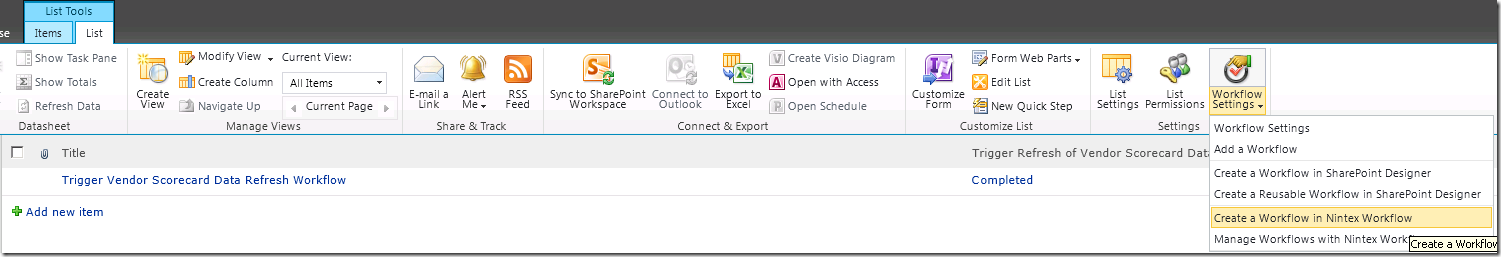

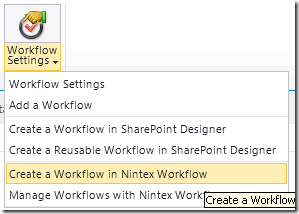

Create a SharePoint list called Vendor Scorecard Data Refresh. Add one item to the list. You could name the item Trigger Vendor Scorecard Data Refresh Workflow. On the List toolbar select Workflow Settings, then Create a Workflow in Nintex Workflow.

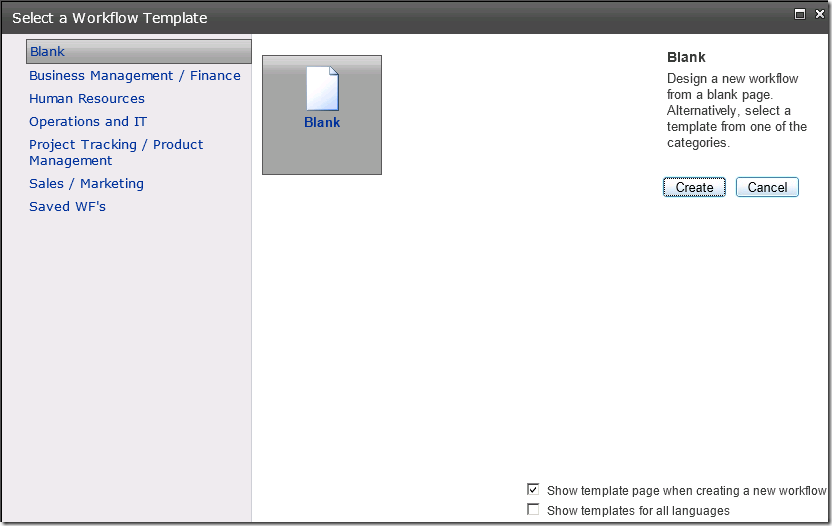

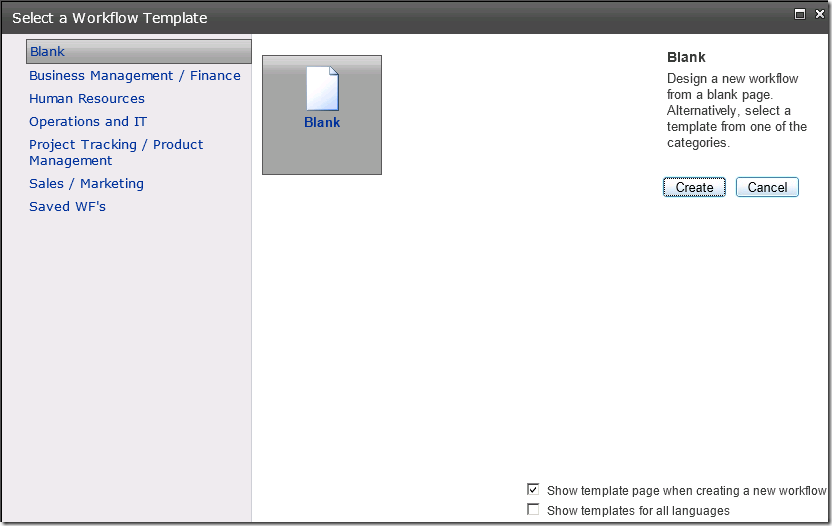

Leave the template as Blank and click the Create button.

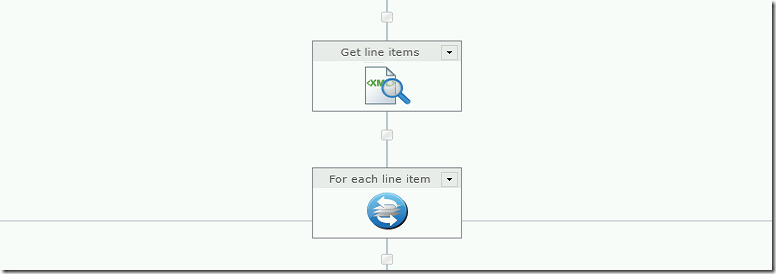

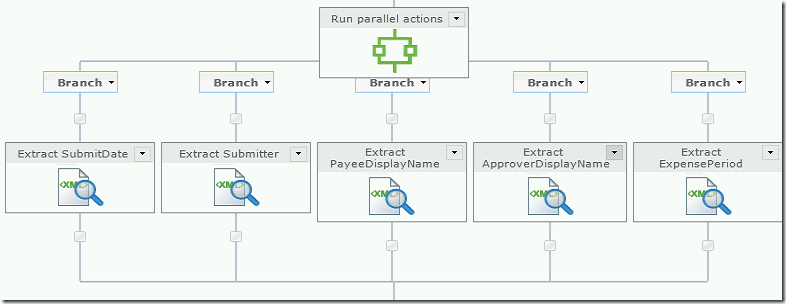

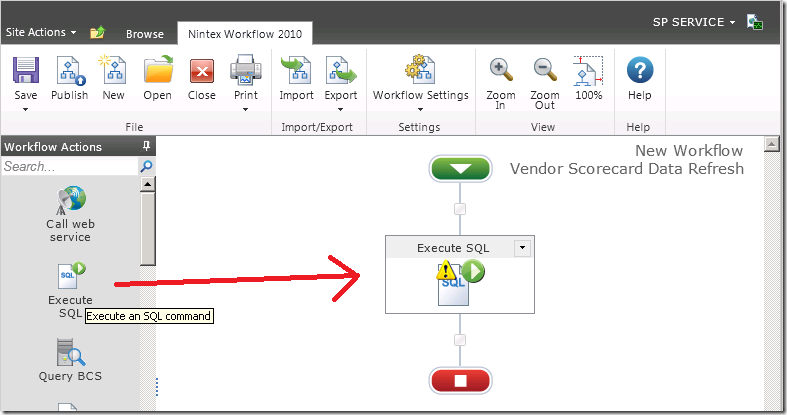

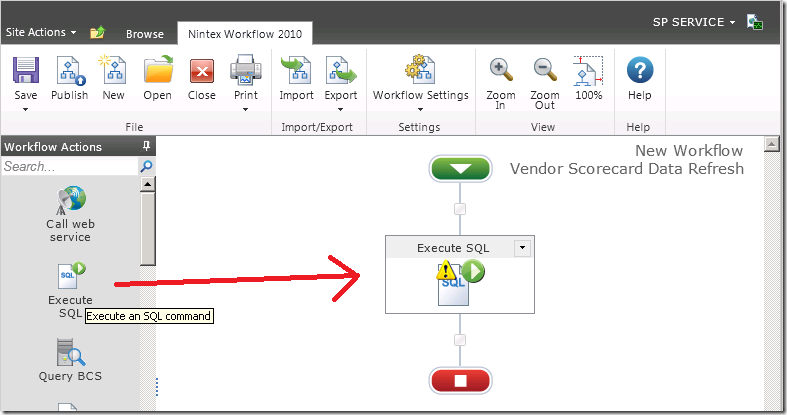

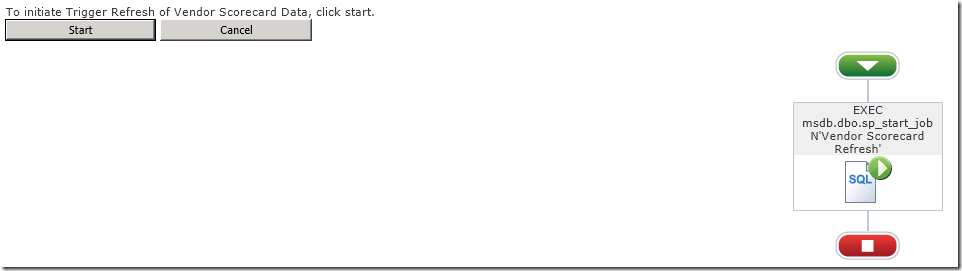

In the bottom left of your screen choose the workflow action type of Integration.

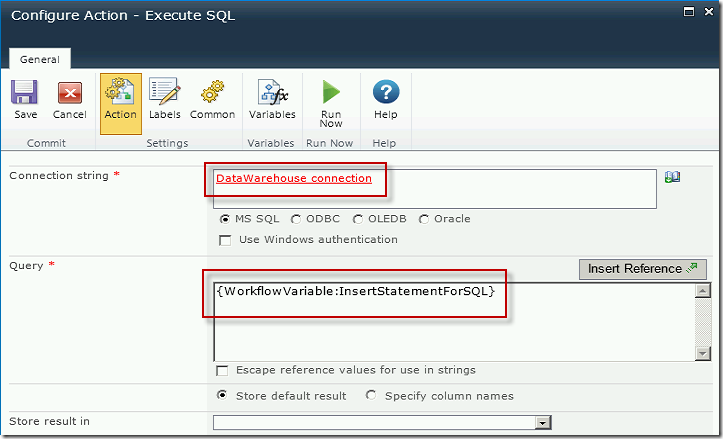

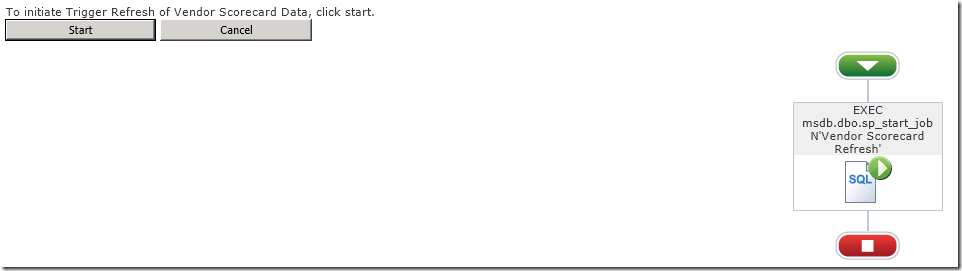

From the resulting actions which appear in the Workflow Actions pane, drag the Execute SQL action onto your workflow.

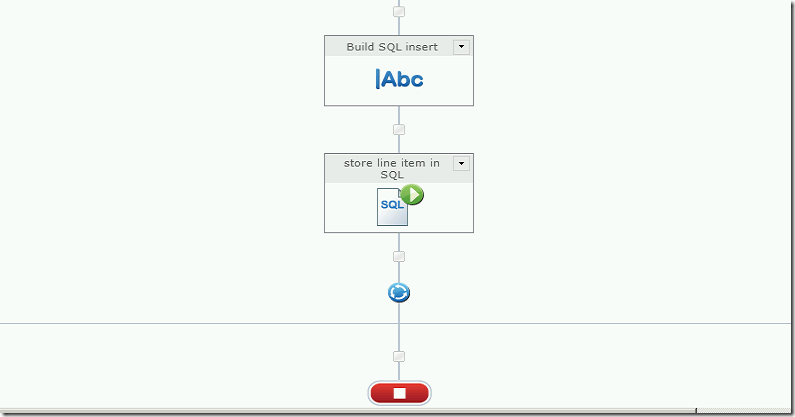

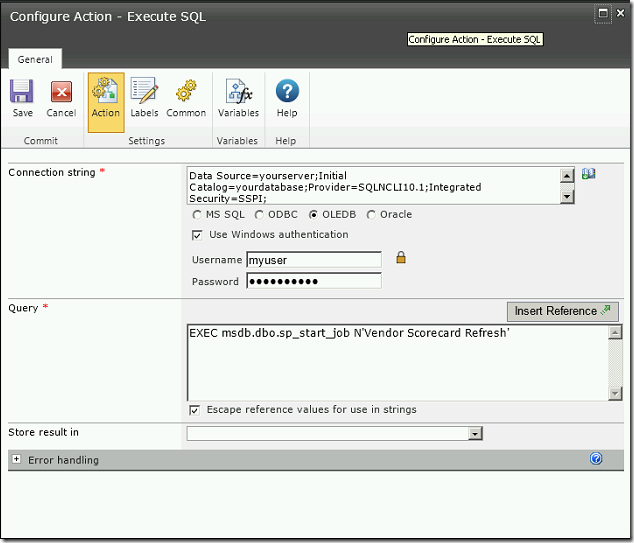

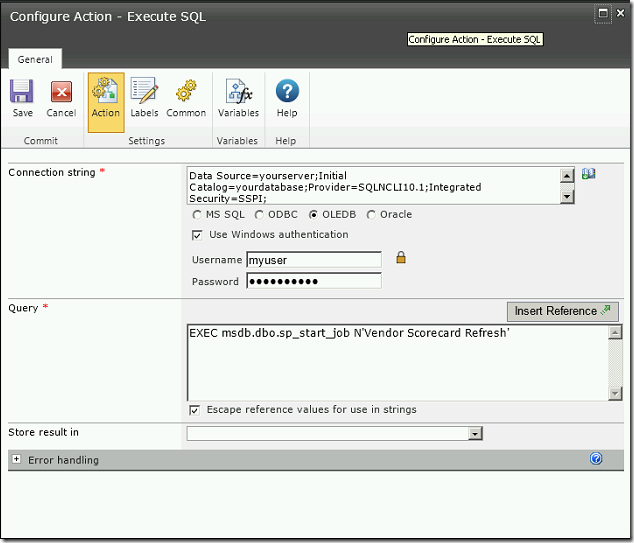

Double-click in the center of the Execute SQL icon to configure the action. Select OLEDB and key in your connection string:

Data Source=yourserver;Initial Catalog=yourdatabase;Provider=SQLNCLI10.1;Integrated Security=SSPI;

Check the box for Use Windows authentication. Key in the username and password of an account that has permission to trigger the SQL Server Agent Job. Enter the query which will trigger the job:

EXEC msdb.dbo.sp_start_job N'Vendor Scorecard Refresh'
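Note that sp_start_job returns as soon as the job has been started. It does not wait for the job to finish, so the workflow completes quickly even if the refresh takes several minutes.

The account used by the action needs rights in msdb to start the job. One way to grant them, sketched here under the assumption that the account is a Windows login which already exists on the server (the account name is hypothetical), is to add it to SQLAgentOperatorRole, whose members can start any local job:

```sql
USE msdb;
GO

-- Hypothetical account used by the Nintex Execute SQL action.
-- Assumes a server login for this account already exists.
CREATE USER [YOURDOMAIN\svc_nintex] FOR LOGIN [YOURDOMAIN\svc_nintex];

-- Members of SQLAgentOperatorRole can start any local Agent job.
EXEC sp_addrolemember N'SQLAgentOperatorRole', N'YOURDOMAIN\svc_nintex';
GO
```

This role also allows stopping jobs and viewing their history, so check it against your own security policy before using it.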

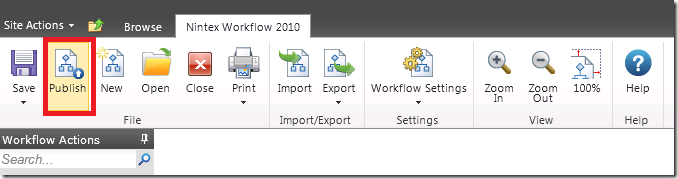

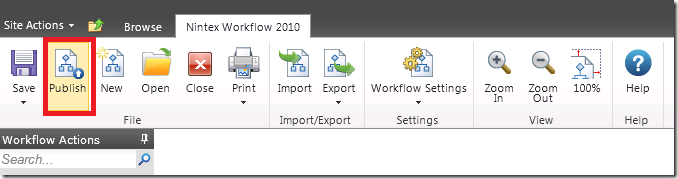

Click on Save to save the action. Publish the workflow by clicking on the Publish button.

TRIGGER THE WORKFLOW MANUALLY

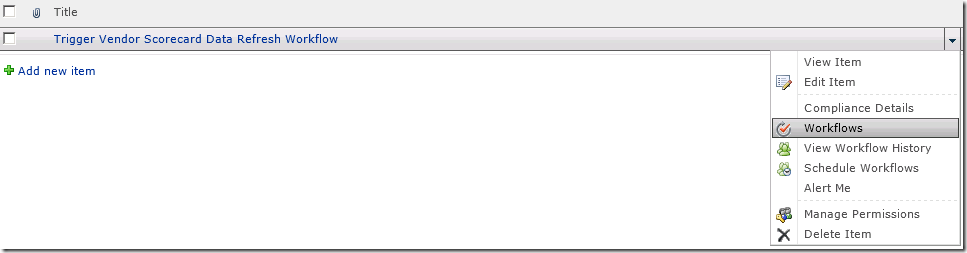

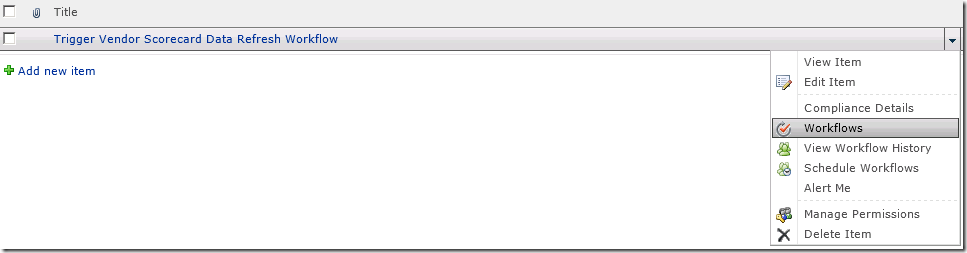

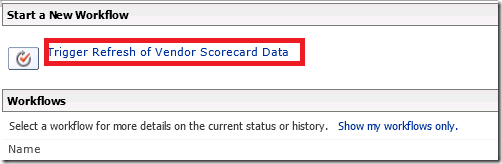

Navigate to your Vendor Scorecard Data Refresh SharePoint list. Click on the dropdown beside your one item and select Workflows.

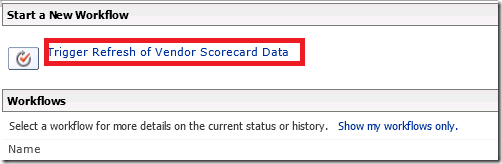

Select the workflow you want to start. You can store more than one workflow on a SharePoint list, but this can get confusing to users so I recommend you keep it to one workflow per SharePoint list unless there is a very good reason to do otherwise.

Click the Start button to trigger the workflow.

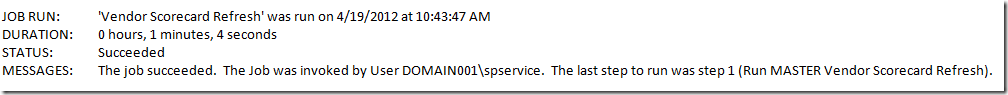

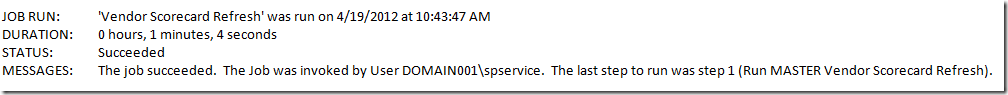

If you set up notifications on the SQL Server Agent job you will receive an email when the job completes. The email will tell you if the job was successful.

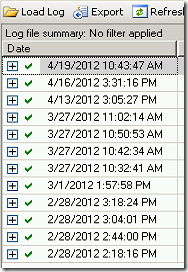

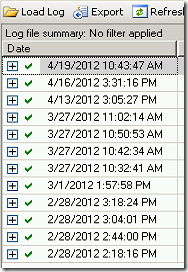

If you did not set up notifications you can check the history of the job. The job outcome will not appear in the history until the job completes, so you will need to wait a few minutes for the steps in your SSIS package to finish before you can check whether it ran successfully. In SSMS right-click on the SQL Server Agent Job and select View History. You will see a green checkmark beside the date and time if the job ran successfully. If it failed you will see a red X.
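The same history can be read with a query against msdb, which is handy if you want to check the outcome without opening the job in SSMS. This is a sketch that assumes the job name used earlier in this post:

```sql
-- Most recent outcomes for the job, newest first.
SELECT TOP (5)
    j.name AS job_name,
    h.run_date,       -- integer in YYYYMMDD form
    h.run_time,       -- integer in HHMMSS form
    h.run_duration,   -- integer in HHMMSS form
    CASE h.run_status
        WHEN 0 THEN 'Failed'
        WHEN 1 THEN 'Succeeded'
        WHEN 2 THEN 'Retry'
        WHEN 3 THEN 'Canceled'
        ELSE 'In progress'
    END AS outcome,
    h.message
FROM msdb.dbo.sysjobhistory AS h
JOIN msdb.dbo.sysjobs AS j
    ON j.job_id = h.job_id
WHERE j.name = N'Vendor Scorecard Refresh'
  AND h.step_id = 0   -- step 0 is the overall job outcome row
ORDER BY h.instance_id DESC;
```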

That’s it, you’re done. Users can now trigger their own data updates as required.