This is a neat little trick. My client wanted to filter a report by employee manager, but didn’t have a good way to maintain the list of employees and their managers. There were a few possibilities, but we wanted to use a system that already maintained the relationship between employee and manager. We decided to pull the information out of Active Directory, using an SSIS package.

Addendum Sept. 12, 2012: The approach outlined below works fine for a simple data set where the query will not return more records than the AD paging file size will allow (typically 1000 records), and you are only querying single value attributes. However, I recommend you use this Script Task approach instead of using a Data Flow. It is more powerful and flexible yet still simple method of importing any size data set from Active Directory, regardless of paging file size. Read the step-by-step here: Get around Active Directory Paging on SSIS import

Find the Active Directory Server

The first thing I needed to know was which server Active Directory resided on. I didn’t want to have wait for I.T. to get back to me with that answer. There is a very easy way to find this out if you are logged into the same domain. Just run a command prompt and use the command

ping %USERDNSDOMAIN%

This will return the active directory server name and IP address.

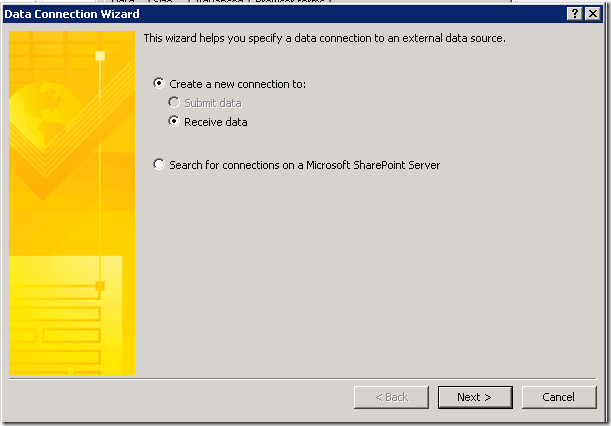

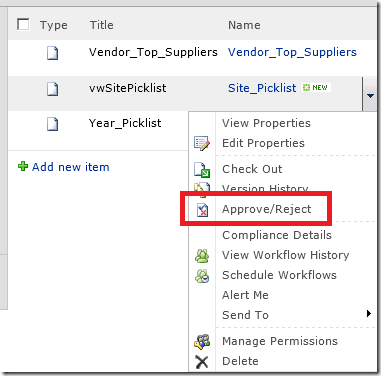

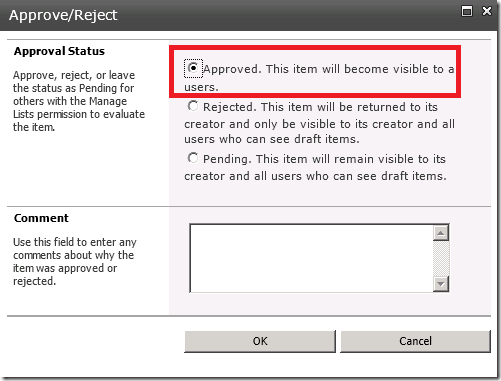

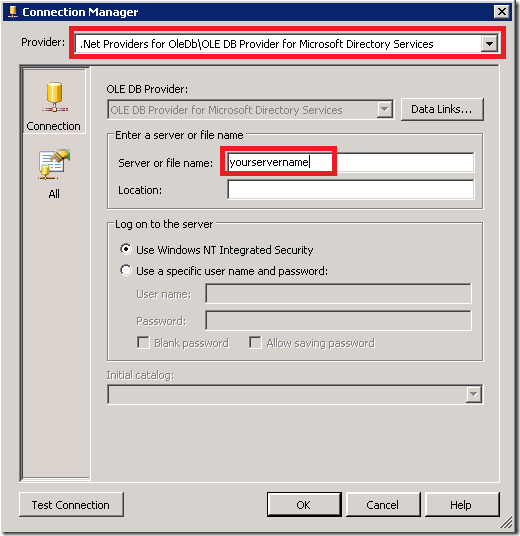

Create the ADO.NET Connection in SSIS

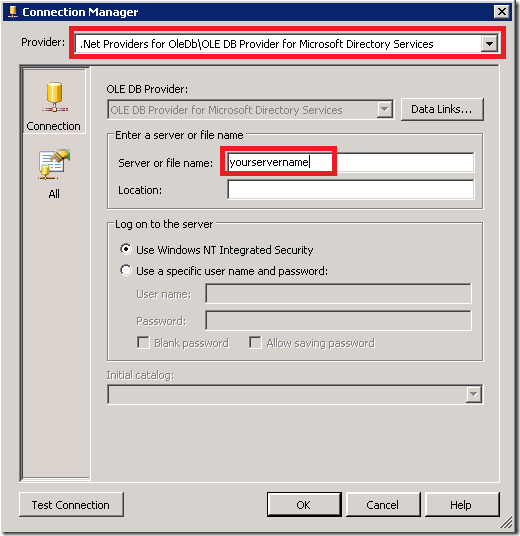

Create your SSIS solution and your package. Now create the connection to the Active Directory server. There is a little trick to this. In order to use the connection in a data flow you need to create an ADO.NET connection. During the create process, in the Provider drop down, change the Provider to OLE DB Provider for Microsoft Directory Services. Then enter your server name and test as normal. If you were to create an OLE DB connection instead of ADO.NET and change the provider, it would not work for Data Flow connections. It must be an ADO.NET connection type.

Use ADO NET Source in your Data Flow

On the data flow tab, drag an ADO.NET Source onto the design surface. Open up the ADO.NET Source Editor and choose the connection you created in the previous step. Choose “SQL Command” for Data Access mode. Now you will write a query to pull the desired information from Active Directory. Your query will look something like this:

SELECT displayName, Mail, Title, physicalDeliveryOfficeName, telephoneNumber, Manager, name, sAMAccountName

FROM ‘LDAP://yourservername’

WHERE objectClass=’user’

or this, depending on how you like to structure your LDAP queries.

<LDAP://yourservername>; (objectClass=user); displayName, Mail, Title, physicalDeliveryOfficeName, telephoneNumber, Manager, name, sAMAccountName

You must put single quotes around the server. For more information on what attributes are available to be imported from Active Directory I found this to be helpful: http://www.kouti.com/tables/userattributes.htm.

You can test your query in SSMS like this:

SELECT displayName, Mail, Title, physicalDeliveryOfficeName, telephoneNumber, Manager, name, sAMAccountName

FROM Openquery (adsi, ‘SELECT displayName, Mail, Title, physicalDeliveryOfficeName, telephoneNumber, Manager, name, sAMAccountName

FROM “LDAP://yourservername” WHERE objectClass = ”user” ‘)

For this SQL Query to work you will need to add your AD server as a linked servier on your SQL server and name it ADSI. In SSMS open up Server Objects and right click on Linked Servers. Select New Linked Server. On the General tab select “OLE DB Provider for Microsoft Directory Services” as the Provider. Type in your AD server name in the Product Name and the Data source. Leave the other fields blank and click OK.

When running your query, if you get an error like this: “Cannot fetch a row from OLE DB provider “ADsDSOObject” for linked server “adsi”, there is a good chance your query is returning more rows than the AD paging file size allows. In that case you can do one of two things: 1) filter your query further to return fewer rows (try filtering on a specific sAMAccountName to be sure that the query syntax isn’t the problem) or 2) use a C# script which is not affected by paging file size as outlined here Get around Active Directory Paging on SSIS import .

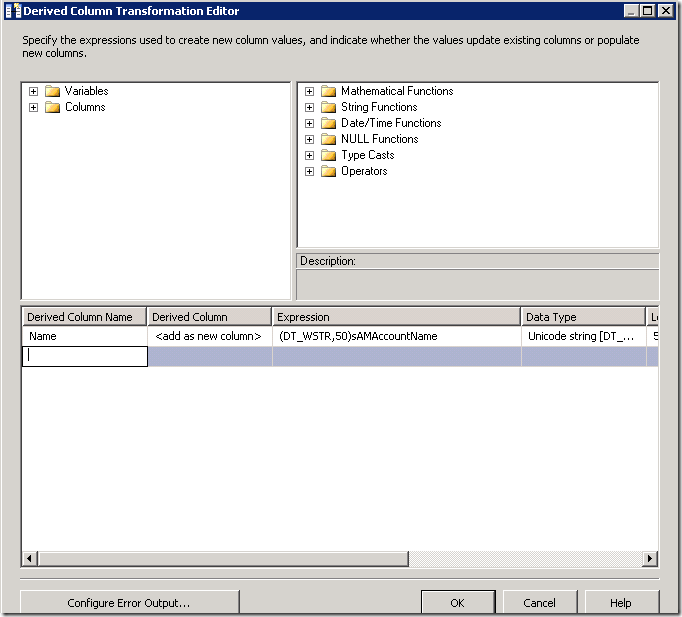

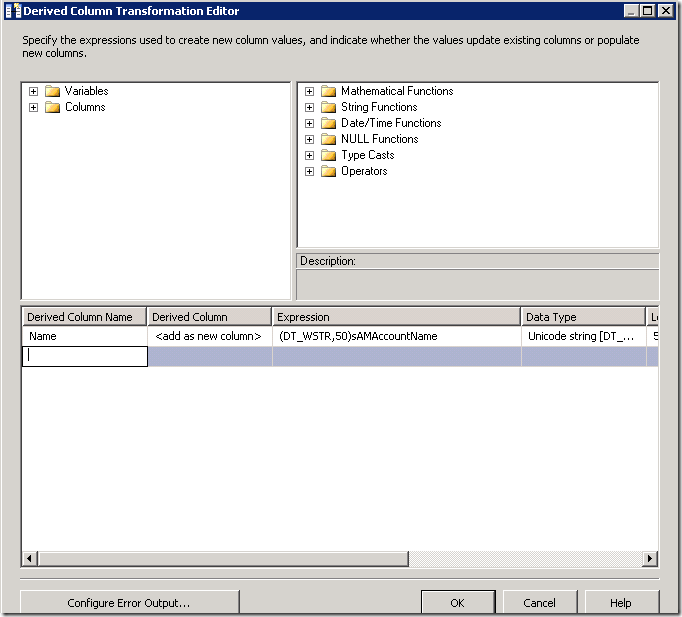

Data Formatting

Each field will be of NTEXT type. You can leave them as NTEXT if this is an acceptable format for your destination, or you can add Derived Columns which convert the values in an expression, like this:

(DT_WSTR,50)sAMAccountName

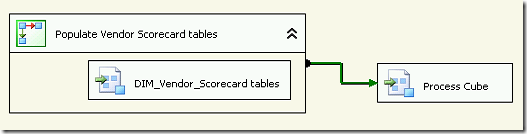

Add your Destination

Add your data flow destination and map the fields as you wish. In this case I created a table in the data warehouse to receive the Active Directory data.

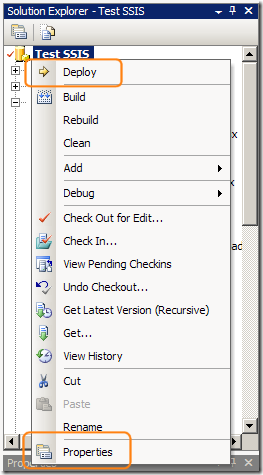

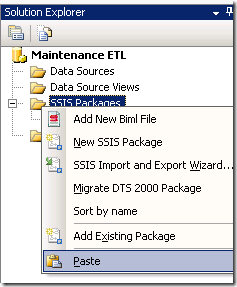

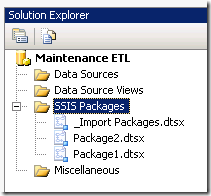

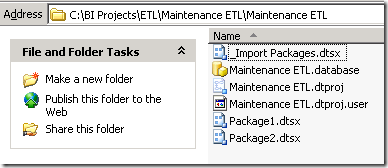

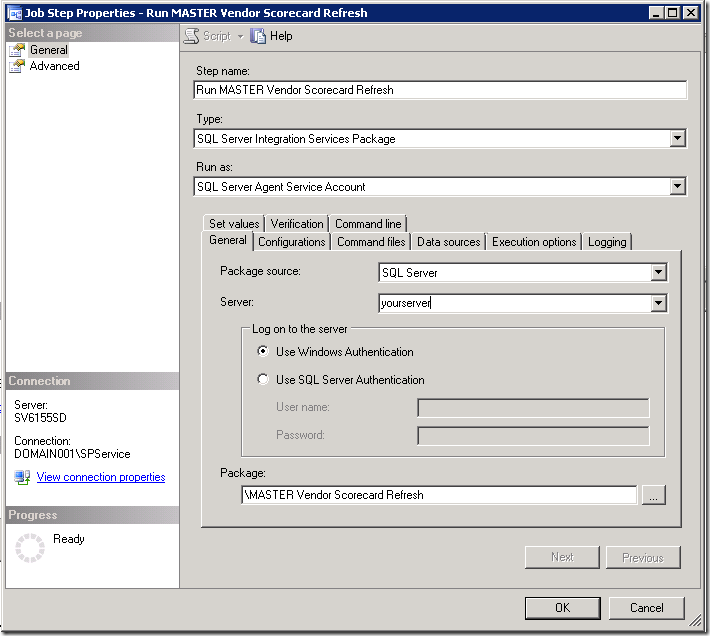

You can now finish your package and deploy it. This is a simple but very powerful method. I found it very useful and I know I’m going to be using it again.

Addendum Sept. 12, 2012: This approach works fine for a simple data set where the query will not return more records than the AD paging file size will allow (typically 1000 records), and you are only querying single value attributes. However, I recommend you use this Script Task approach instead of using a Data Flow. It is more powerful and flexible yet still simple method of importing any size data set from Active Directory, regardless of paging file size. Read the step-by-step here: Get around Active Directory Paging on SSIS import

Credits

In preparing this post, I found the following articles to be useful:

http://social.technet.microsoft.com/wiki/contents/articles/processing-active-directory-information-in-ssis.aspx

http://www.kouti.com/tables/userattributes.htm