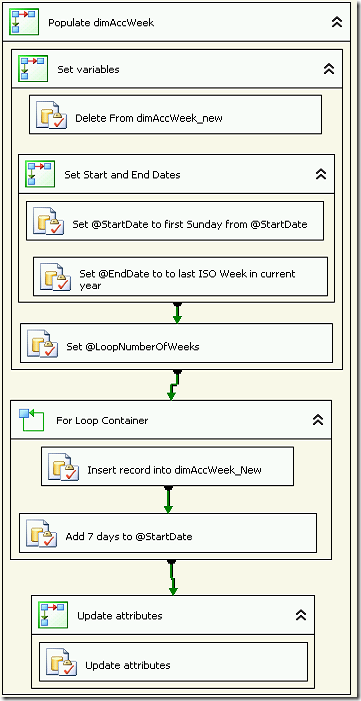

I normally use dimDate tables in my projects, but for this client it made sense to have this at the week level. Rather than sticking with my usual dimDate stored procedure to build out the table and attributes, I thought I’d take the opportunity to migrate the logic over to an SSIS package. Here is a quick overview of how it works.

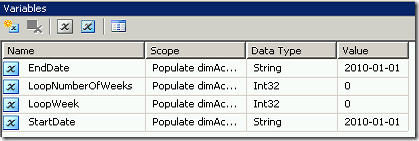

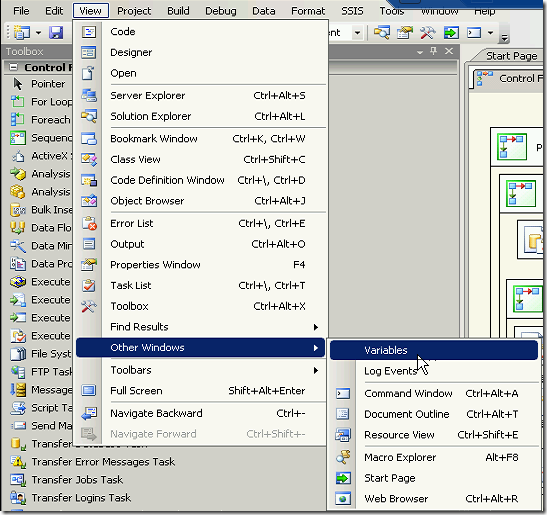

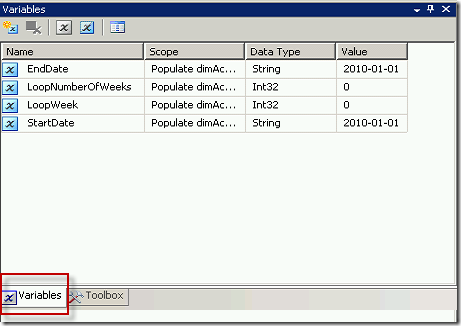

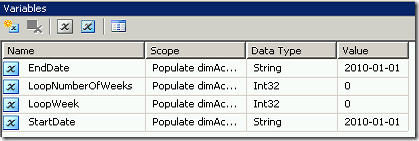

In my SSIS package I created 4 variables. Three of these variables will be populated by the package. Only the StartDate needs to be populated by the user. In this case the first date to be populated in the table is the first day of 2010.

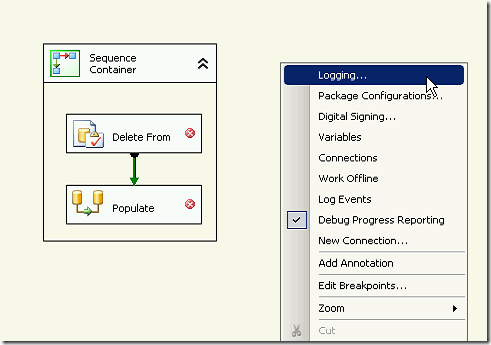

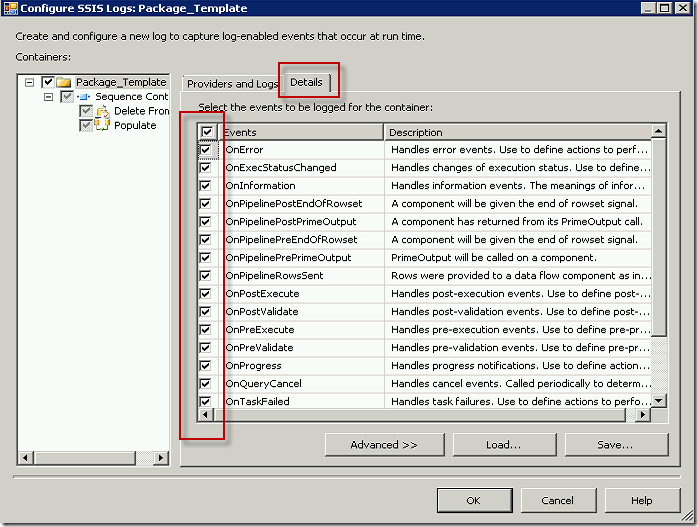

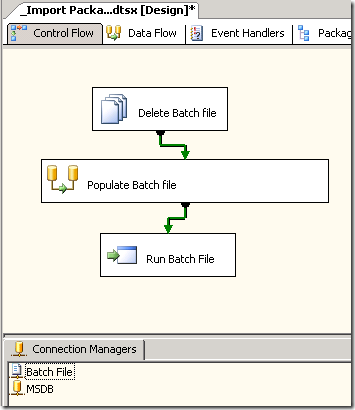

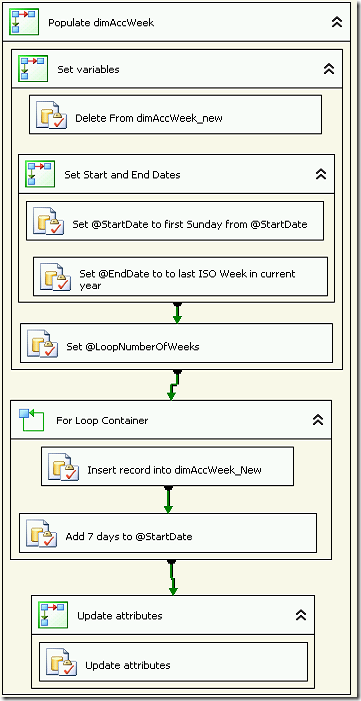

The tasks are fairly straightforward and the finished package looks like this.

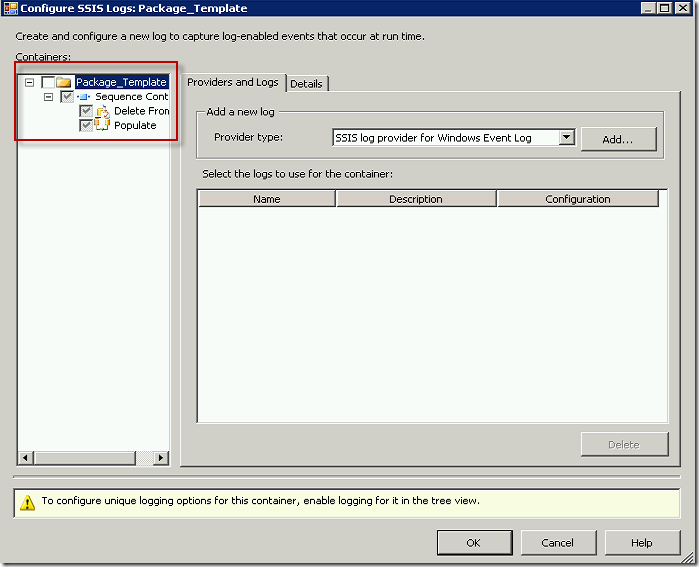

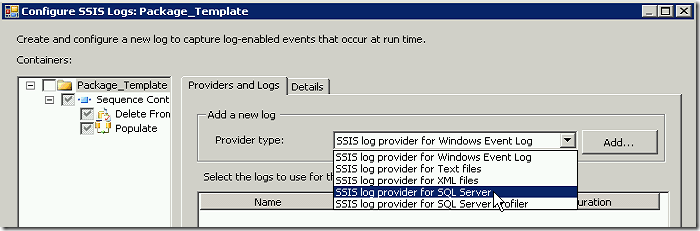

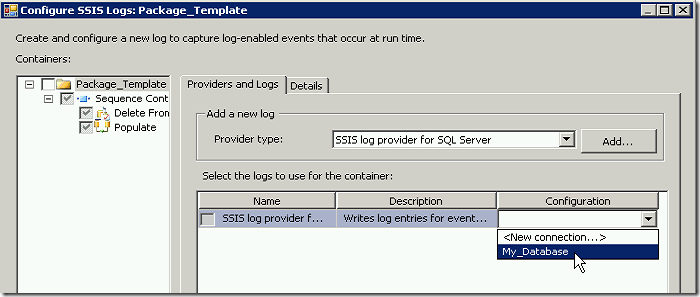

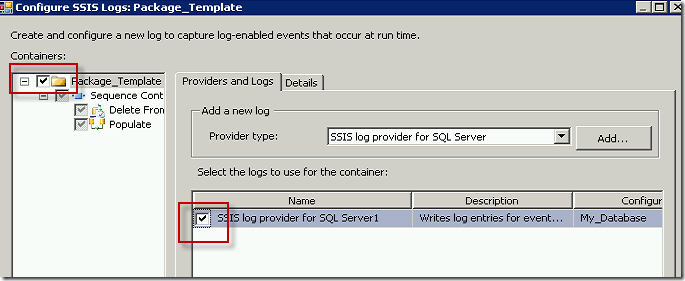

I wanted to blog certain parts of this, largely so I can remember them the next time I do this.

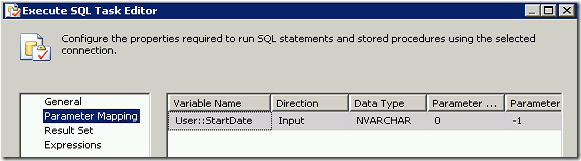

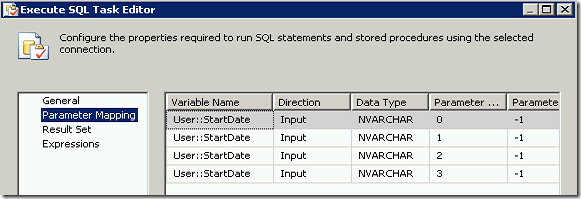

I will lay out the important SQL Statements used in these steps. For specifics on how to set up variables and tasks using variables you can check out this post How to Set and Use Variables in SSIS Execute SQL Task

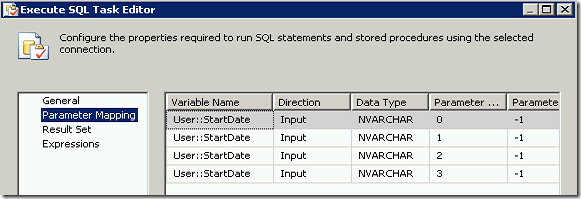

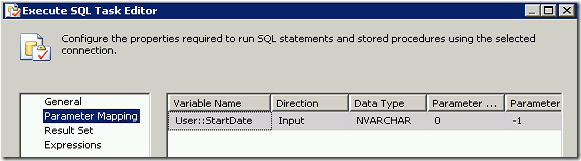

Set @StartDate to first Sunday from @StartDate

This task is in place to ensure that the StartDate is set to the correct day of the week, as required by the business. This client has the start of their business week on Sunday. If the user has entered a date into the package variable which is not a Sunday, this task will set the @StartDate to the nearest Sunday after the date in the variable. The fact that the SQL Server DATEPART ISOWW starts on a Monday will be addressed later in this post. The CAST statement is added to allow the results to be inserted into the @StartDate string variable.

SELECT

CAST(

CASE WHEN DATENAME(dw,?) = ‘Sunday’ THEN ? –@StartDate

ELSE DATEADD(d,8-DATEPART(dw,?),?) END

as nvarchar) as NewStartDate

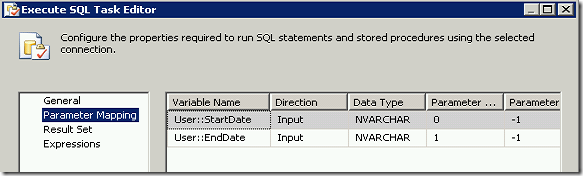

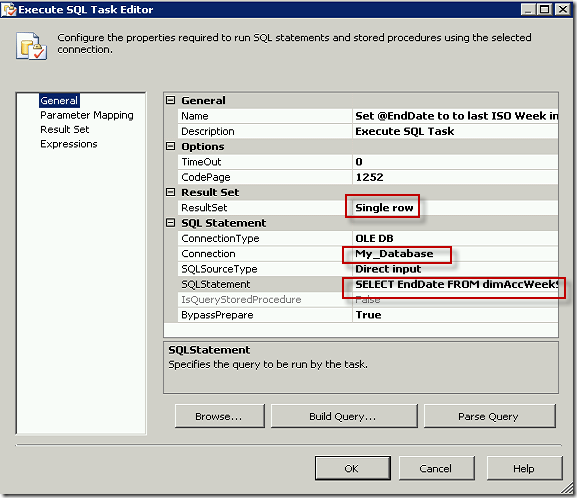

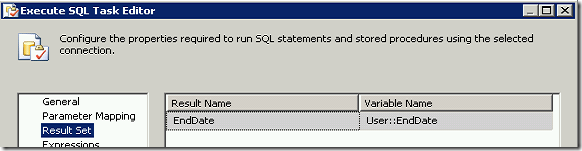

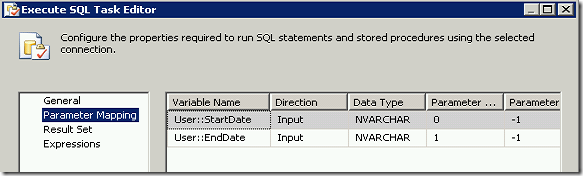

Set @EndDate to to last ISO Week in current year

The client wants the table to contain weeks up to the last week of the current ISO year. When we move into next year, the new year will automatically be added. You can edit this statement to meet your business requirements. The CAST statement is added to allow the results to be inserted into the @EndDate string variable.

SELECT

CAST(

–Check to see if current year is 52 or 53 weeks

CASE WHEN DATEPART(ISOWW,DATEADD(WEEK,53 – DATEPART(ISOWW,GETDATE()),GETDATE())) = 1

THEN DATEADD(WEEK,52 – DATEPART(ISOWW,GETDATE()),GETDATE())

ELSE DATEADD(WEEK,53 – DATEPART(ISOWW,GETDATE()),GETDATE())

END

as nvarchar)

as EndDate

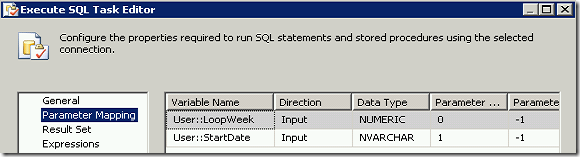

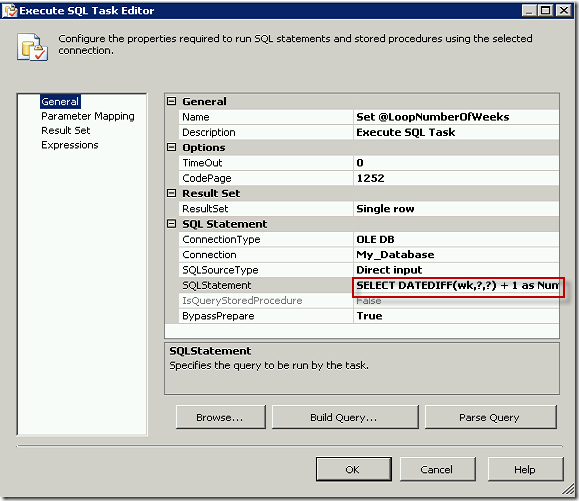

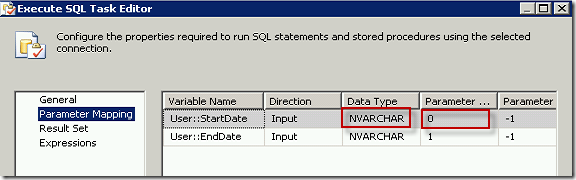

Set @LoopNumberOfWeeks

This will find the number of weeks between the StartDate and the EndDate for use in the For Loop Container. I added 1, because I chose to start my Loop at 1 rather than 0.

SELECT DATEDIFF(wk,?,?) + 1 as NumberOfWeeks

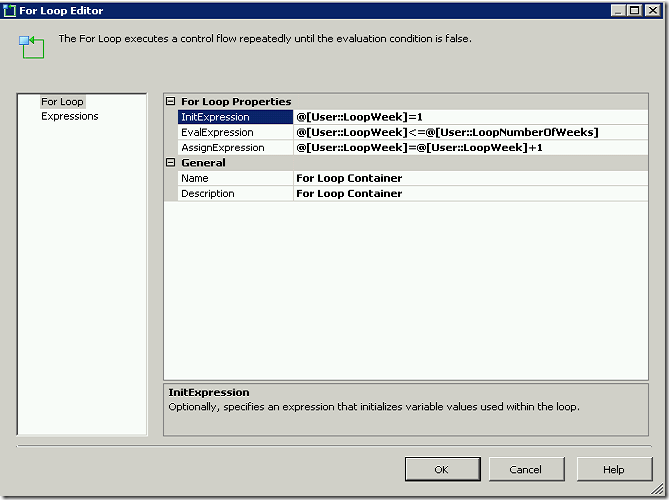

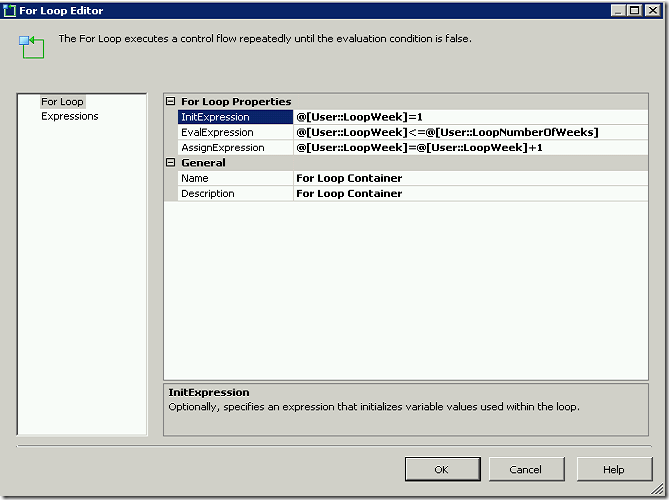

For Loop Container

The container is set to look through the number of weeks calculated in the Set @LoopNumberOfWeeks task. Note that the InitExpression is 1 instead of 0. I am using the @LoopWeek variable to populate one of the fields in my table, which is why I wanted to start with 1.

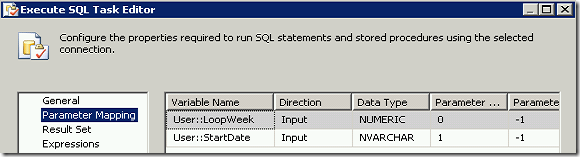

Insert record into dimAccWeek_New

INSERT INTO [dimAccWeek_new]

([AccWeekSK],

[AccWeekStartDate])

SELECT

? as AccWeekSK,

? as AccWeekStartDate

Add 7 days to @StartDate

Instead of using the loop to move the date forward one week, I am updating the variable with an Execute SQL Task inside the loop. The CAST statement is added to allow the results to be inserted into the @StartDate string variable.

SELECT

CAST(

DATEADD(dd,7,?)

as nvarchar) as LoopWeek

Update attributes

Here is the meat of it. When using the DATEPART ISOWW on their SQL Server, the ISO Week starts on a Monday. Since business week starts on a Sunday I am adding 1 day when calculating where it falls within a Week, Quarter, Year

UPDATE dimAccWeek_new

SET AccWeekSK = CASE

–When date is > the first week and date between Jan 1 and 3 then PrevYr + ISOWeek

WHEN DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) > 1

AND DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) BETWEEN CAST(DATEPART(Year,AccWeekStartDate) as varchar) + ‘0101’

AND CAST(DATEPART(Year,AccWeekStartDate) as varchar) + ‘0103’

THEN CAST(DATEPART(Year,AccWeekStartDate)-1 AS varchar) + RIGHT((’00’ + CAST(DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) as varchar)),2)

–When date = the first week and date between Dec 29 and Dec 31 then NextYr + ISOWeek

WHEN DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) = 1

AND AccWeekStartDate

BETWEEN CAST(DATEPART(Year,AccWeekStartDate) as varchar) + ‘1229’

AND CAST(DATEPART(Year,AccWeekStartDate) as varchar) + ‘1231’

THEN CAST(DATEPART(Year,AccWeekStartDate)+1 AS varchar) + RIGHT((’00’ + CAST(DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) AS varchar)),2)

–ELSE CurrentYr + ISOWeek

ELSE CAST(DATEPART(Year,AccWeekStartDate) AS varchar) + RIGHT((’00’ + CAST(DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) AS varchar)),2)END

,AccYear = CASE

–When date is > the first week and date between Jan 1 and 3 then PrevYr

WHEN DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) > 1

AND DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) BETWEEN CAST(DATEPART(Year,AccWeekStartDate) as varchar) + ‘0101’

AND CAST(DATEPART(Year,AccWeekStartDate) as varchar) + ‘0103’

THEN CAST(DATEPART(Year,AccWeekStartDate)-1 AS varchar)

–When date = the first week and date between Dec 29 and Dec 31 then NextYr

WHEN DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate)) = 1

AND AccWeekStartDate

BETWEEN CAST(DATEPART(Year,AccWeekStartDate) as varchar) + ‘1229’

AND CAST(DATEPART(Year,AccWeekStartDate) as varchar) + ‘1231’

THEN CAST(DATEPART(Year,AccWeekStartDate)+1 AS varchar)

–ELSE CurrentYr

ELSE CAST(DATEPART(Year,AccWeekStartDate) AS varchar) END

,AccQuarter = CASE

WHEN DATEPART(mm,AccWeekStartDate) BETWEEN 1 AND 3 THEN 1

WHEN DATEPART(mm,AccWeekStartDate) BETWEEN 4 AND 6 THEN 2

WHEN DATEPART(mm,AccWeekStartDate) BETWEEN 7 AND 9 THEN 3

ELSE 4 END

,AccMonth = DATEPART(mm,AccWeekStartDate)

,AccWeek = DATEPART(ISOWW,DATEADD(dd,1,AccWeekStartDate))

,AccWeekEndDate = DATEADD(dd,6,AccWeekStartDate)

You can add as many attributes as you require. This should get you started.